
Description:

This site provides an exhaustive description of the test data used to evaluate the camera positioning system proposed in [*]. Owing to page length limitations, the manuscript itself contains graphical descriptions for only part of the evaluated settings.

In greater detail, the work [*] proposes a new Bayesian framework for automatically determining the position (location and orientation) of an uncalibrated camera from observations of moving objects and a schematic map of the passable areas of the environment. The approach exploits static and dynamic information on the scene structures through prior probability distributions for object dynamics, and it restricts the plausible positions of the sensor while taking into account the inherent ambiguity of the given setting. The framework samples from the posterior probability distribution for the camera position via Data Driven MCMC, guided by an initial geometric analysis that restricts the search space. A Kullback-Leibler divergence analysis then yields the final camera position estimate while explicitly isolating ambiguous settings. The evaluation, performed on both synthetic and real settings, shows satisfactory performance in both ambiguous and unambiguous settings.

For any question about the article [*] or about the described test data, please contact Raúl Mohedano at rmp@gti.ssr.upm.es.

Citation:

[*] R. Mohedano, A. Cavallaro, N. García, “Camera localization using trajectories and maps”, IEEE Trans. Pattern Analysis and Machine Intelligence, vol. xx, no. x, pp. xxx-xxx, xxx 2013. (under review).

Experimental setup description:

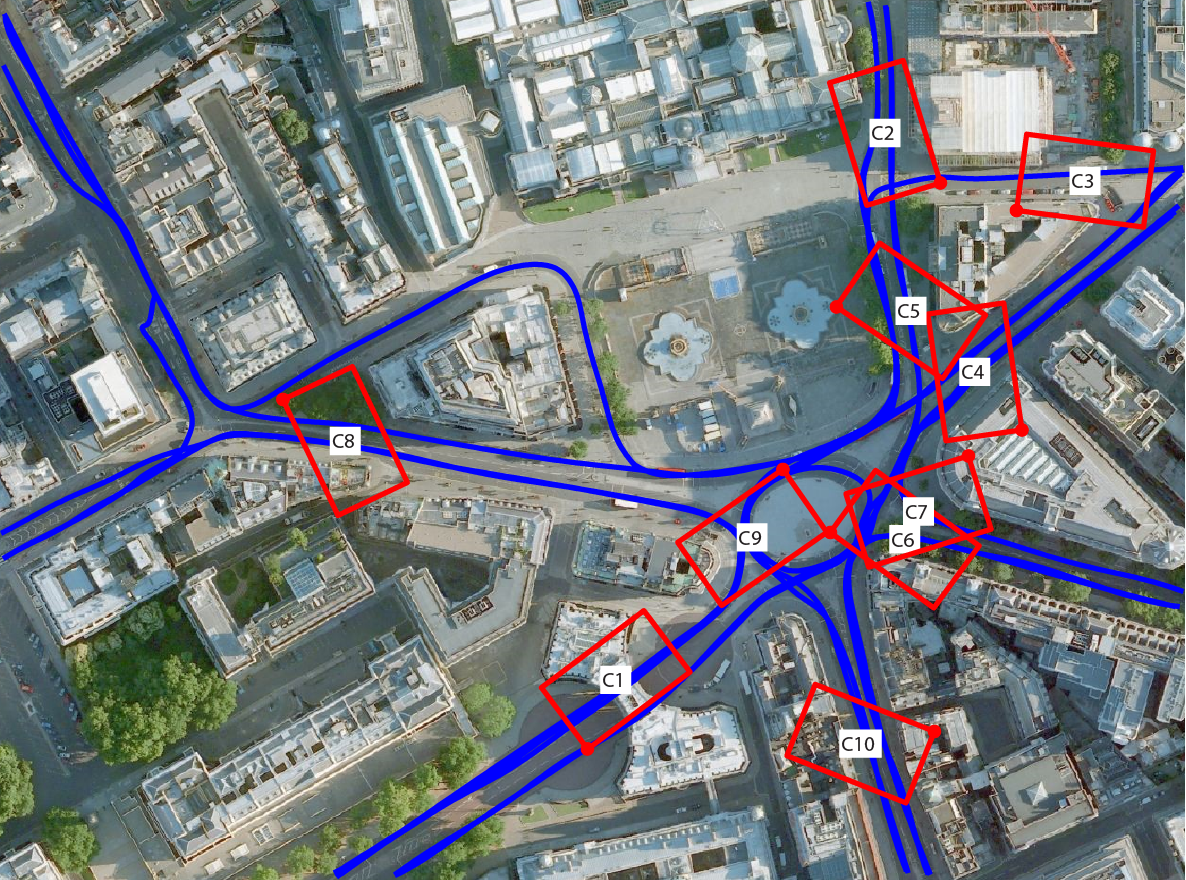

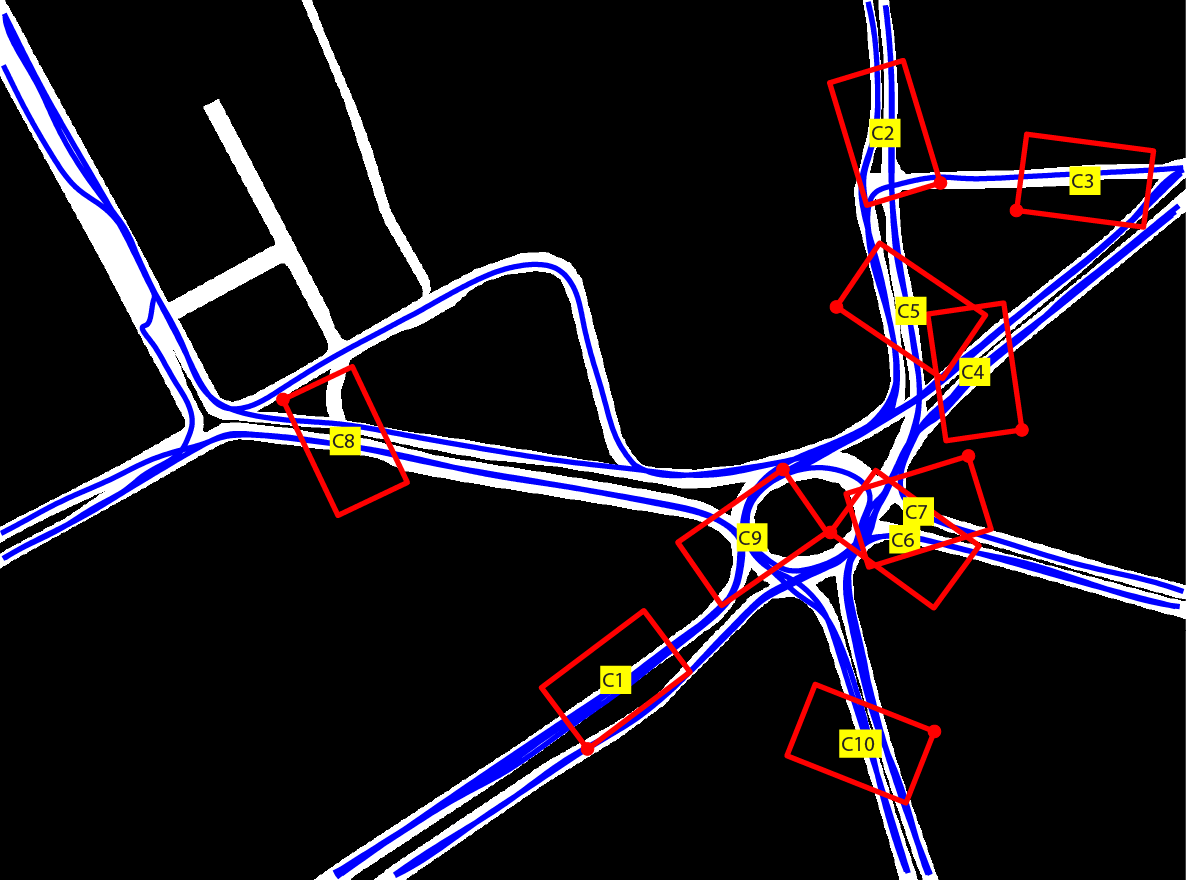

Synthetic database (cameras C1 to C10):

- Real map of the Trafalgar Square area, London (United Kingdom)

- 500 x 400 m

- Resolution: 0.33 m/pix

- 20 semi-automatically generated trajectories

- 10 randomly generated cameras

- Rectangular FoV of size 50 x 35 m

- Observed tracks: between 4 and 10 per camera, at 5 fps
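The map extent, resolution and FoV size listed above imply the raster size of the map and the pixel footprint of each field of view. The following is a minimal sketch of that arithmetic, not code distributed with the data set. It assumes the resolution applies equally on both axes and that pixel counts are rounded to the nearest integer. The names `meters_to_pixels` and `in_fov` are hypothetical, and `in_fov` additionally assumes that a camera position is given as the centre of the rectangular FoV plus a rotation angle, which the description above does not state.

```python
import math

# Values taken from the synthetic database description.
RESOLUTION_M_PER_PIX = 0.33
MAP_SIZE_M = (500.0, 400.0)
FOV_SIZE_M = (50.0, 35.0)


def meters_to_pixels(length_m, resolution=RESOLUTION_M_PER_PIX):
    """Convert a metric length to a pixel count, rounded to the nearest pixel."""
    return round(length_m / resolution)


def in_fov(point, centre, angle_rad, size=FOV_SIZE_M):
    """Return True if a map point (in meters) lies inside a rotated rectangle.

    Assumption (not from the source): the FoV is parameterized by its
    centre and by the rotation of its long axis with respect to the map x axis.
    """
    dx = point[0] - centre[0]
    dy = point[1] - centre[1]
    # Express the offset in the rectangle's own axes.
    u = dx * math.cos(angle_rad) + dy * math.sin(angle_rad)
    v = -dx * math.sin(angle_rad) + dy * math.cos(angle_rad)
    return abs(u) <= size[0] / 2 and abs(v) <= size[1] / 2


map_size_pix = tuple(meters_to_pixels(s) for s in MAP_SIZE_M)  # (1515, 1212)
fov_size_pix = tuple(meters_to_pixels(s) for s in FOV_SIZE_M)  # (152, 106)
```

Under these assumptions the map raster is roughly 1515 x 1212 pixels and each FoV covers roughly 152 x 106 pixels, that is, about 1% of the map area.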

Complete environmental map with true camera FoVs and synthetic routes superimposed

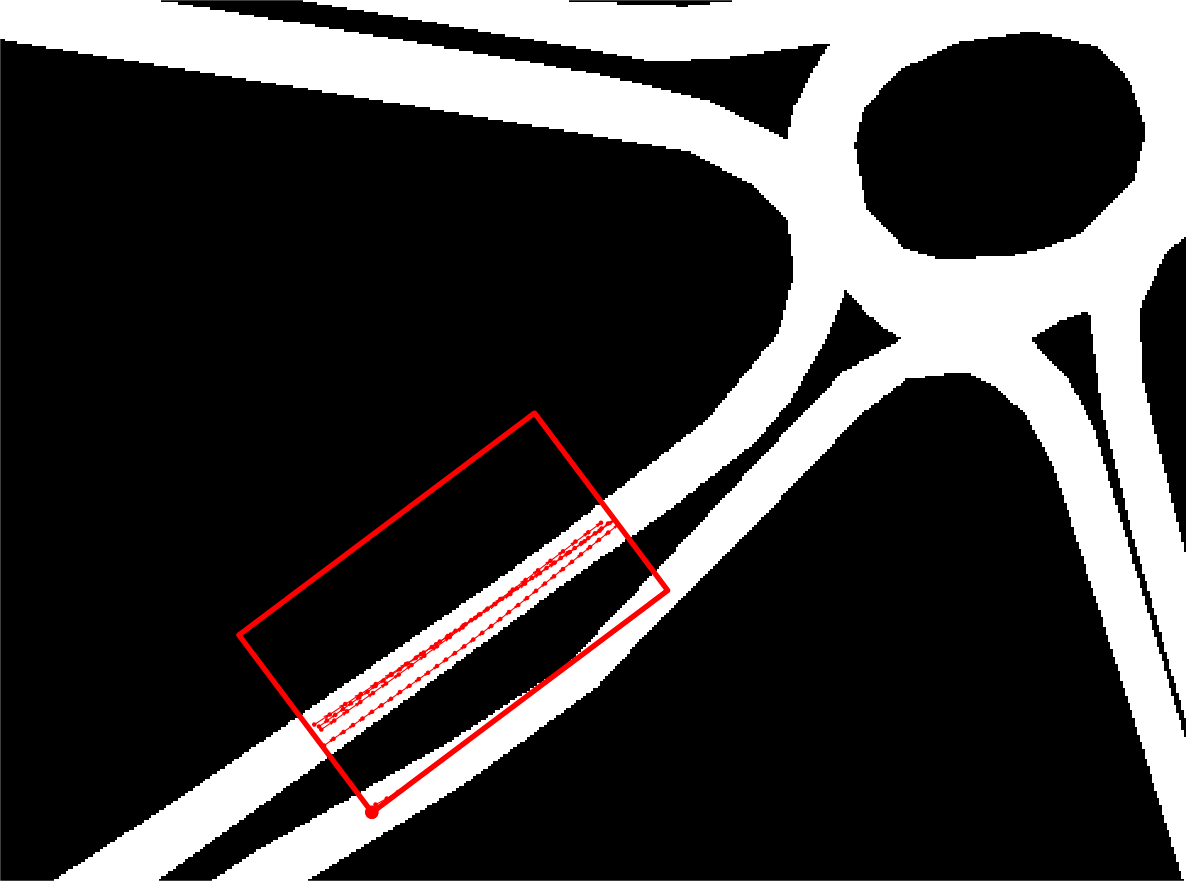

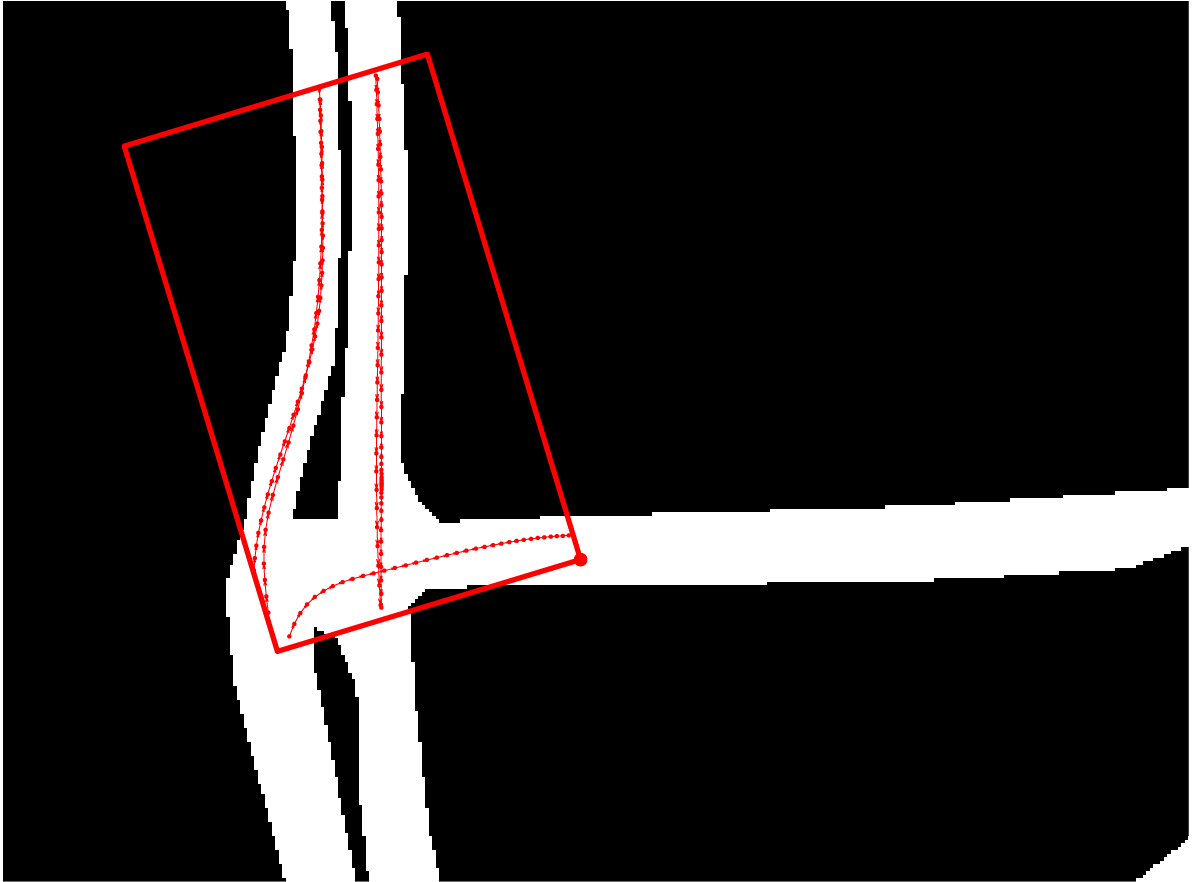

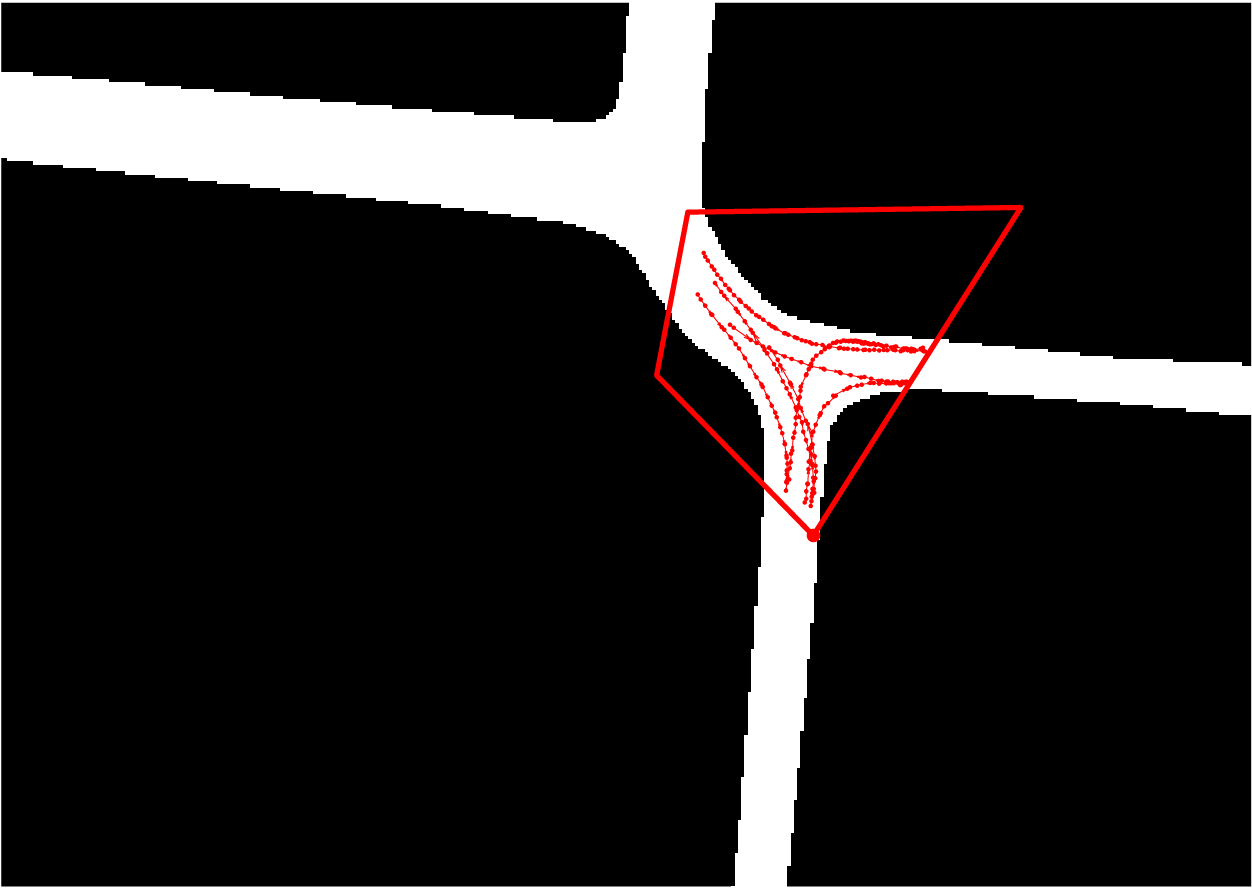

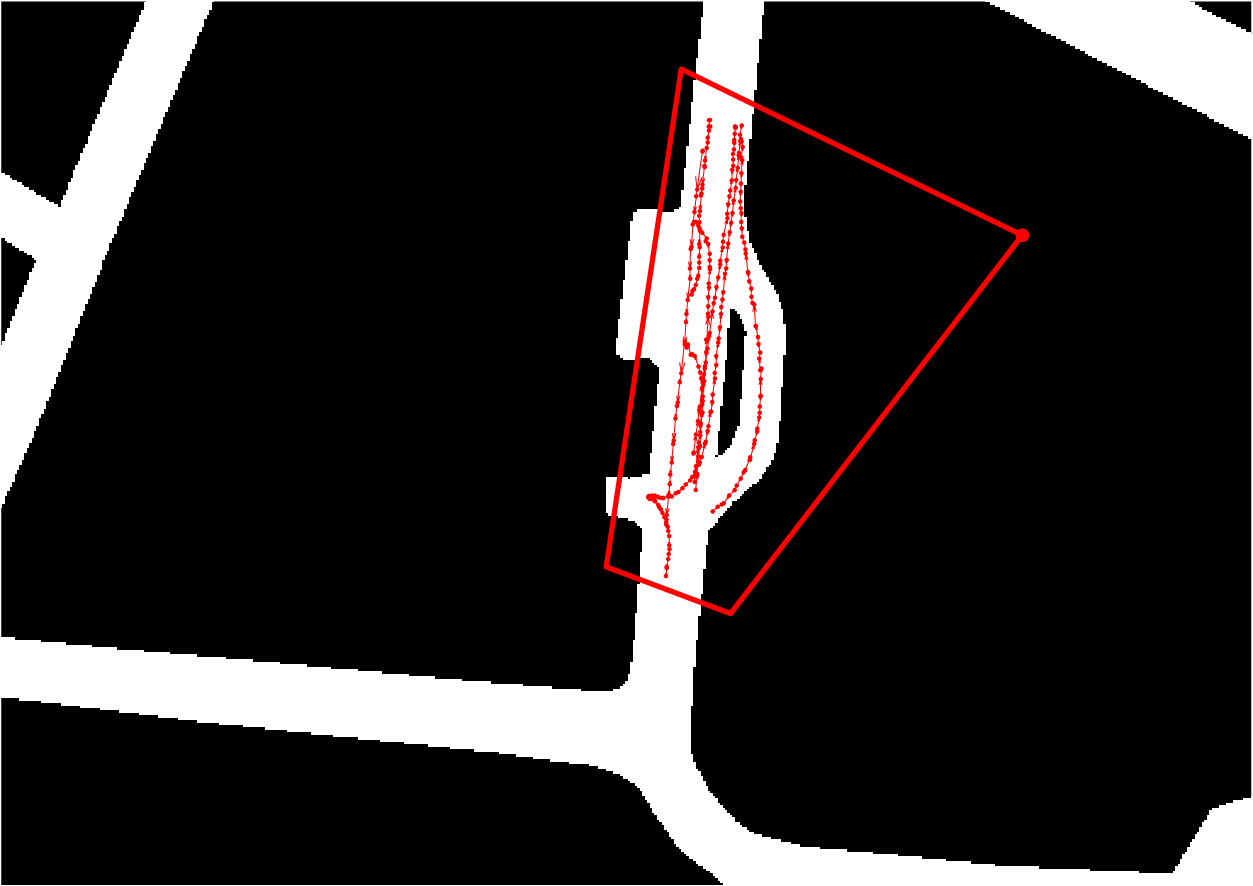

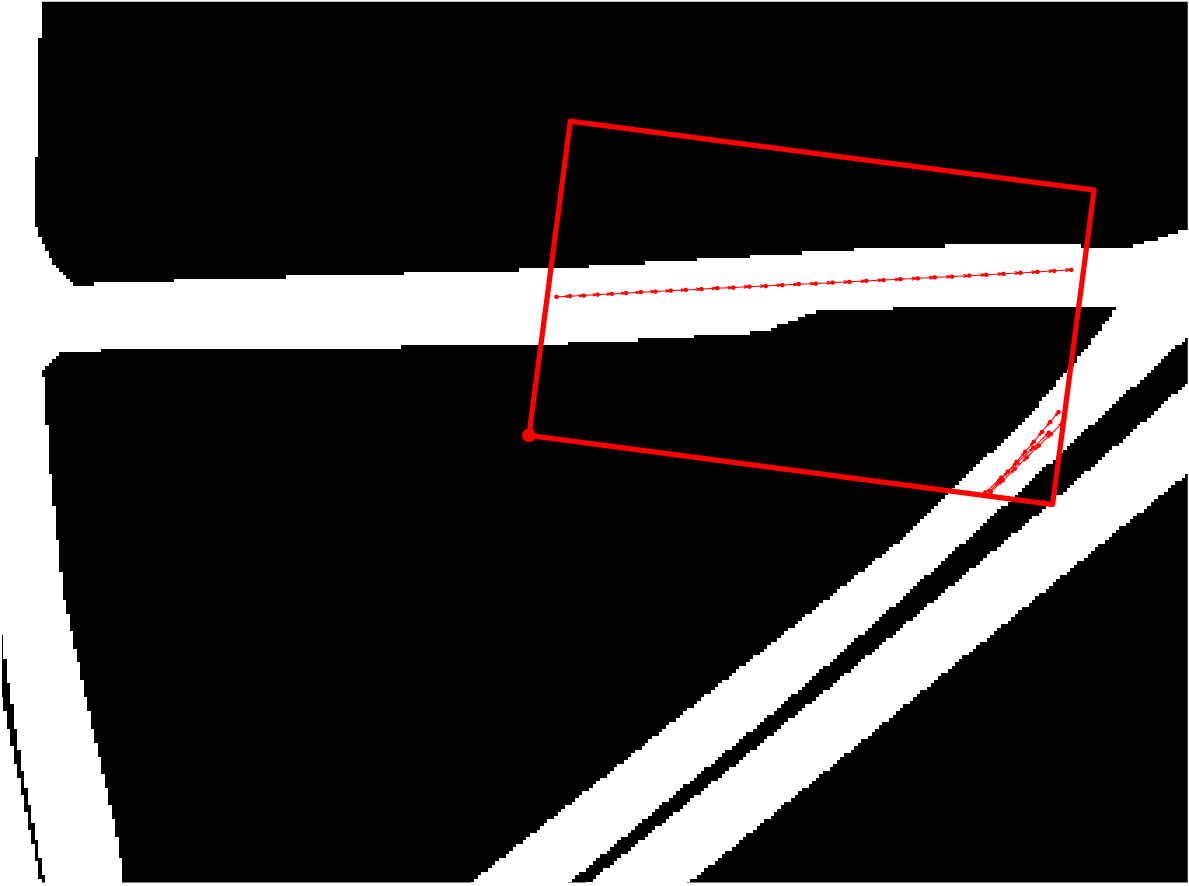

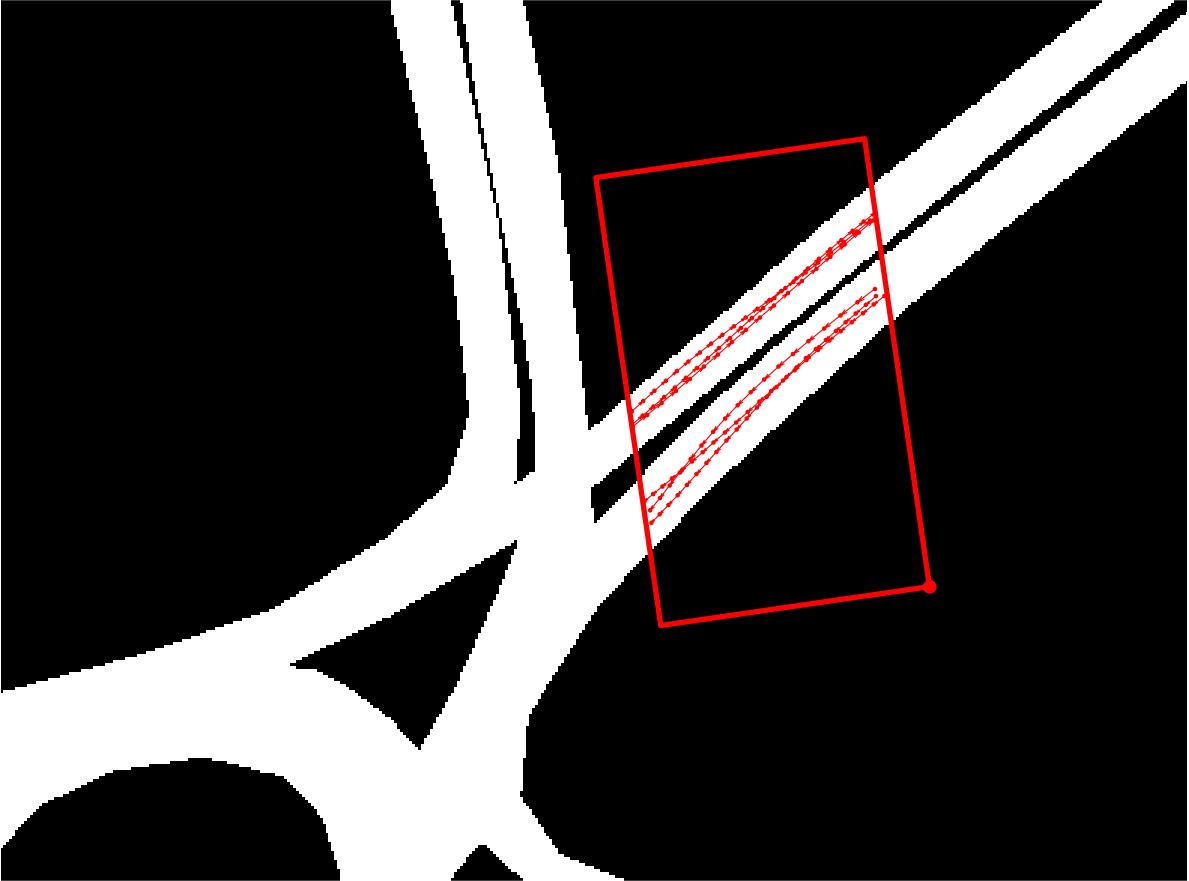

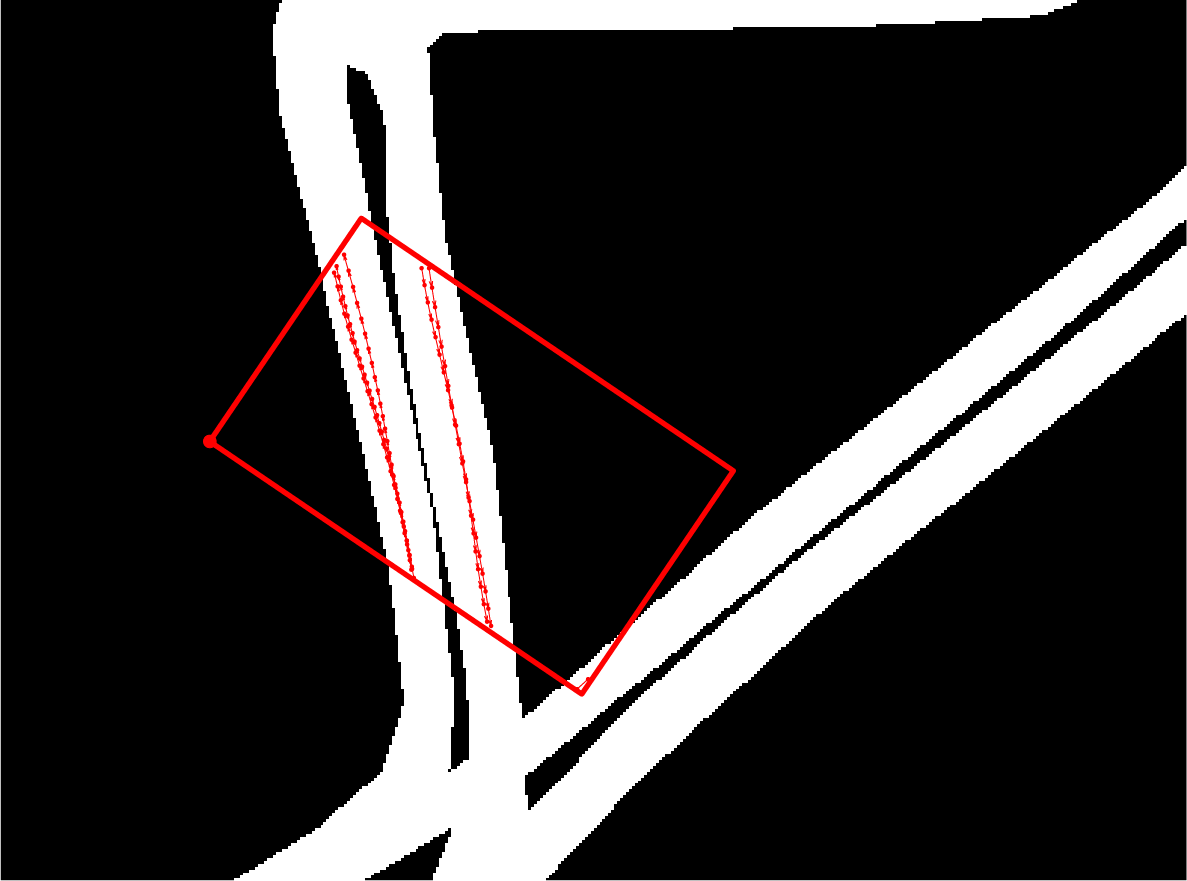

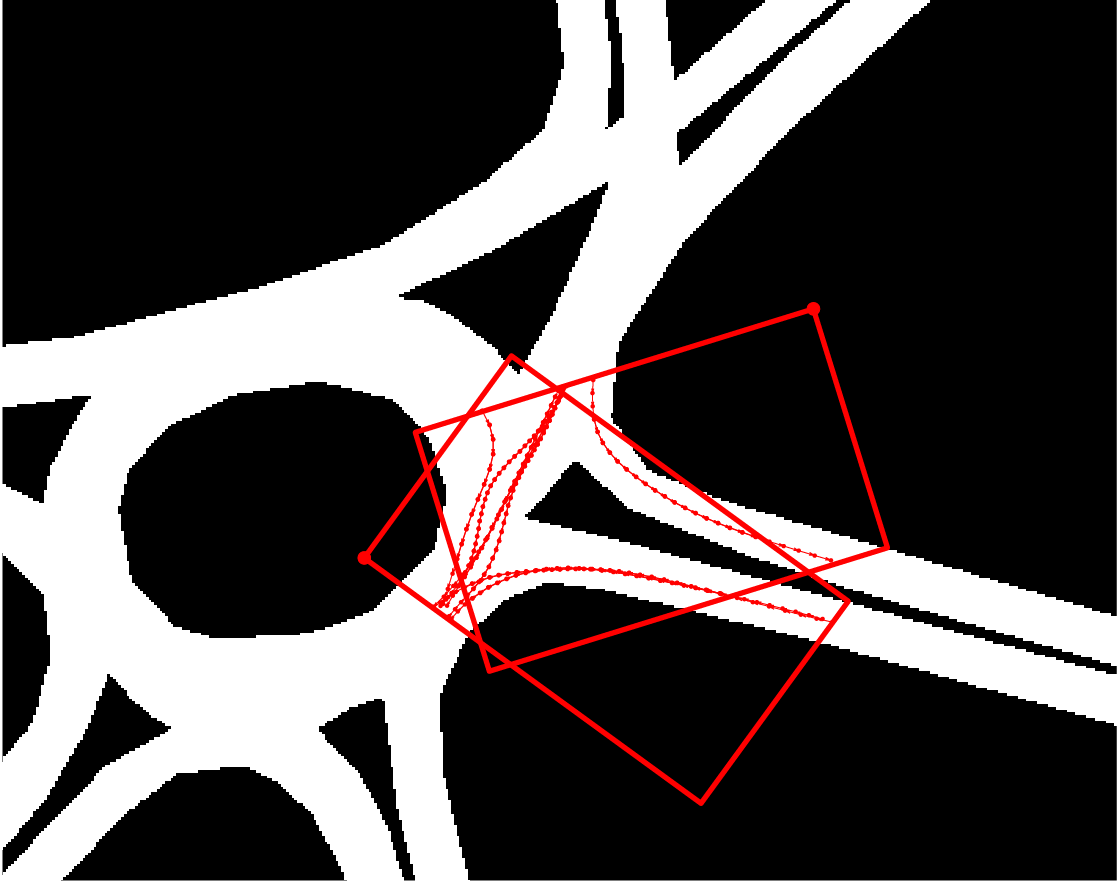

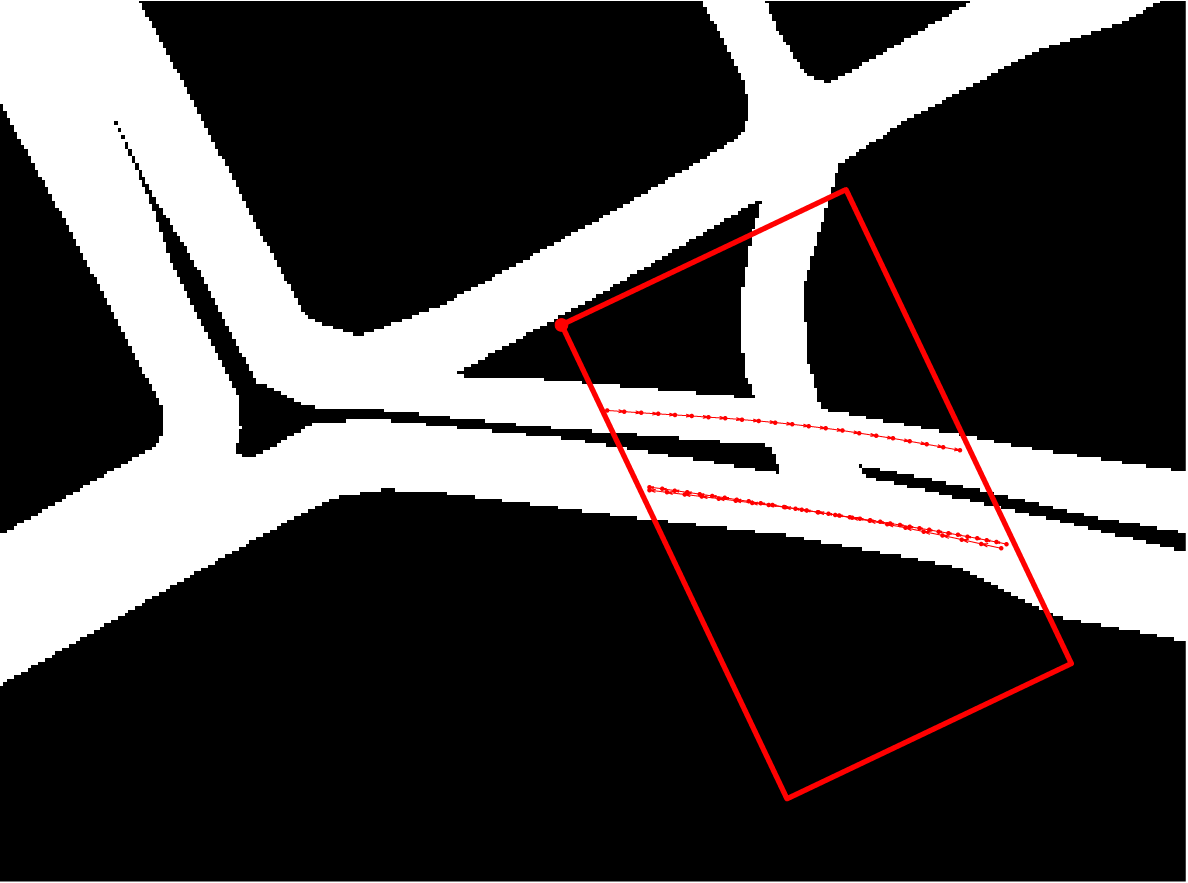

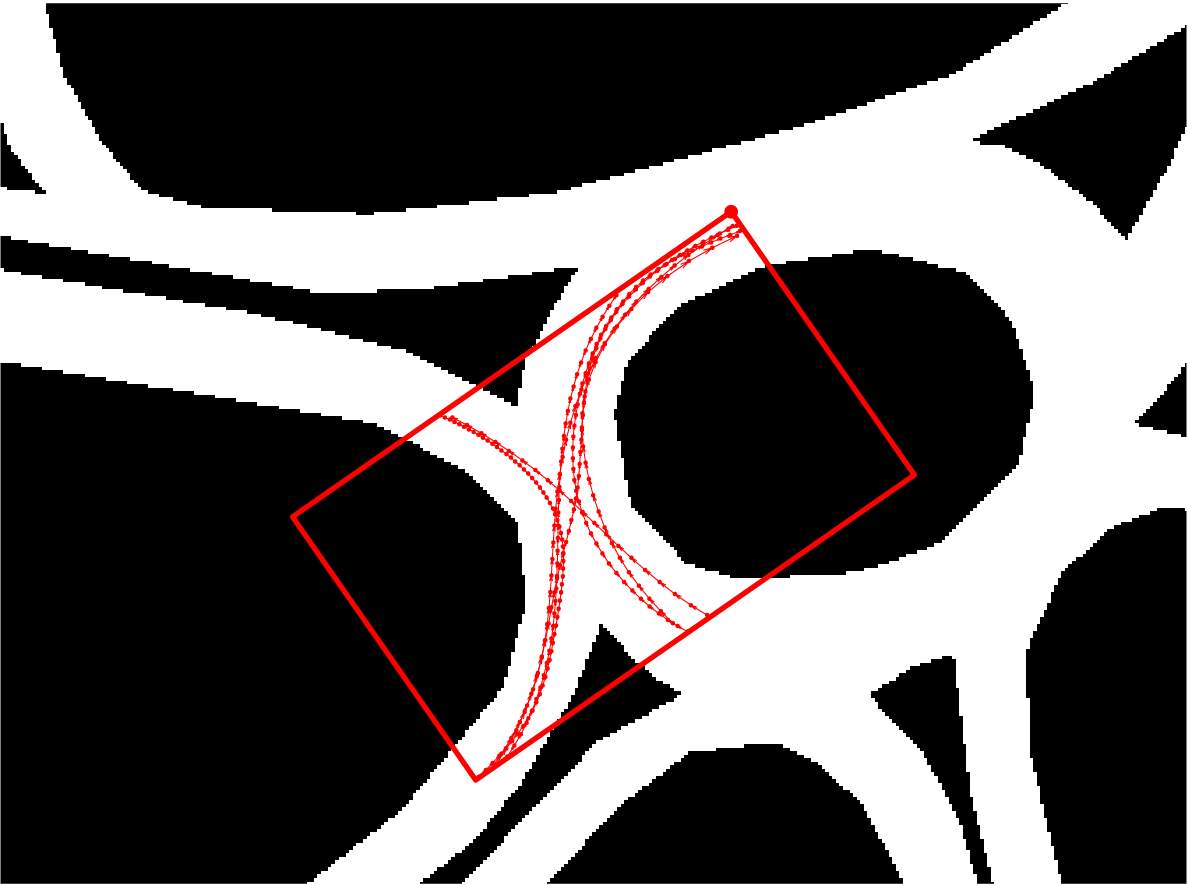

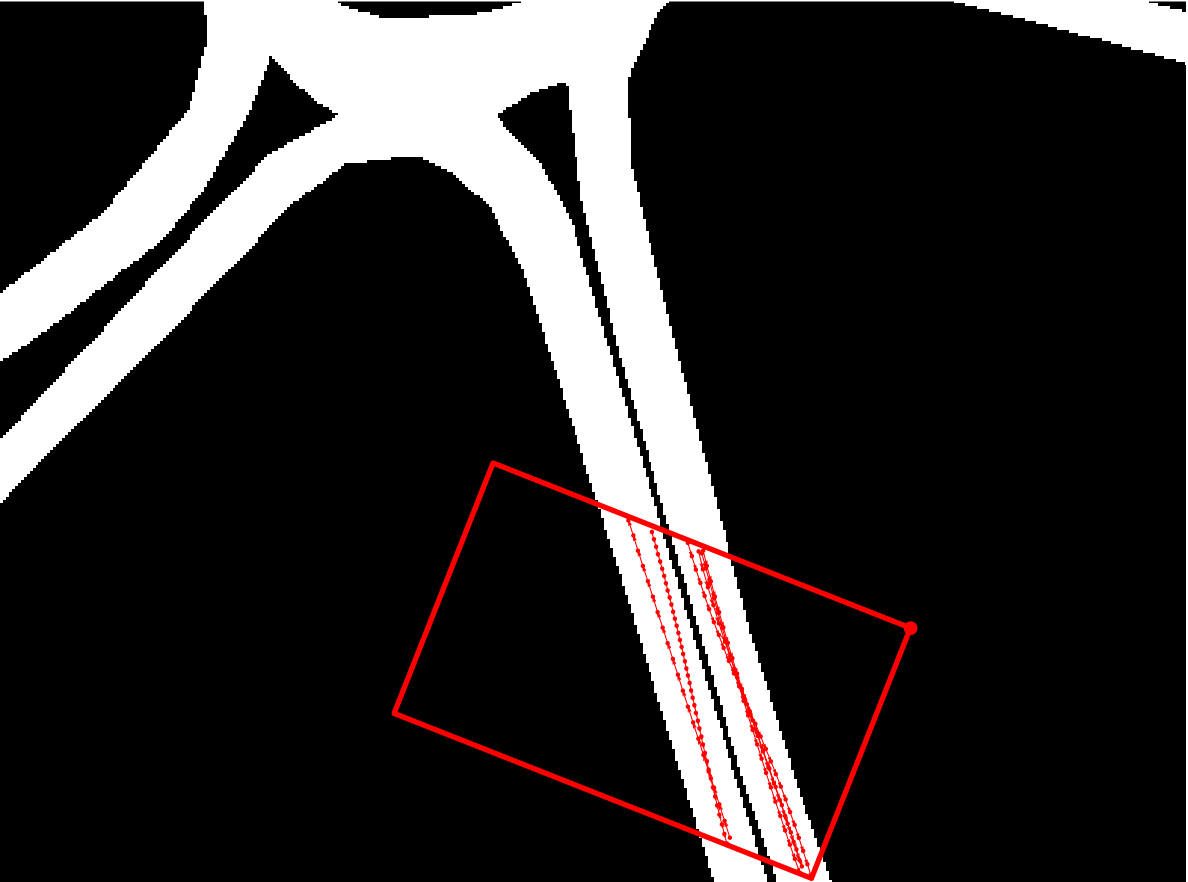

Closeup of the true camera FoVs and observed tracks (red). Labeled panels: C3; C4; C5; C6 and C7; C8; C9; C10.

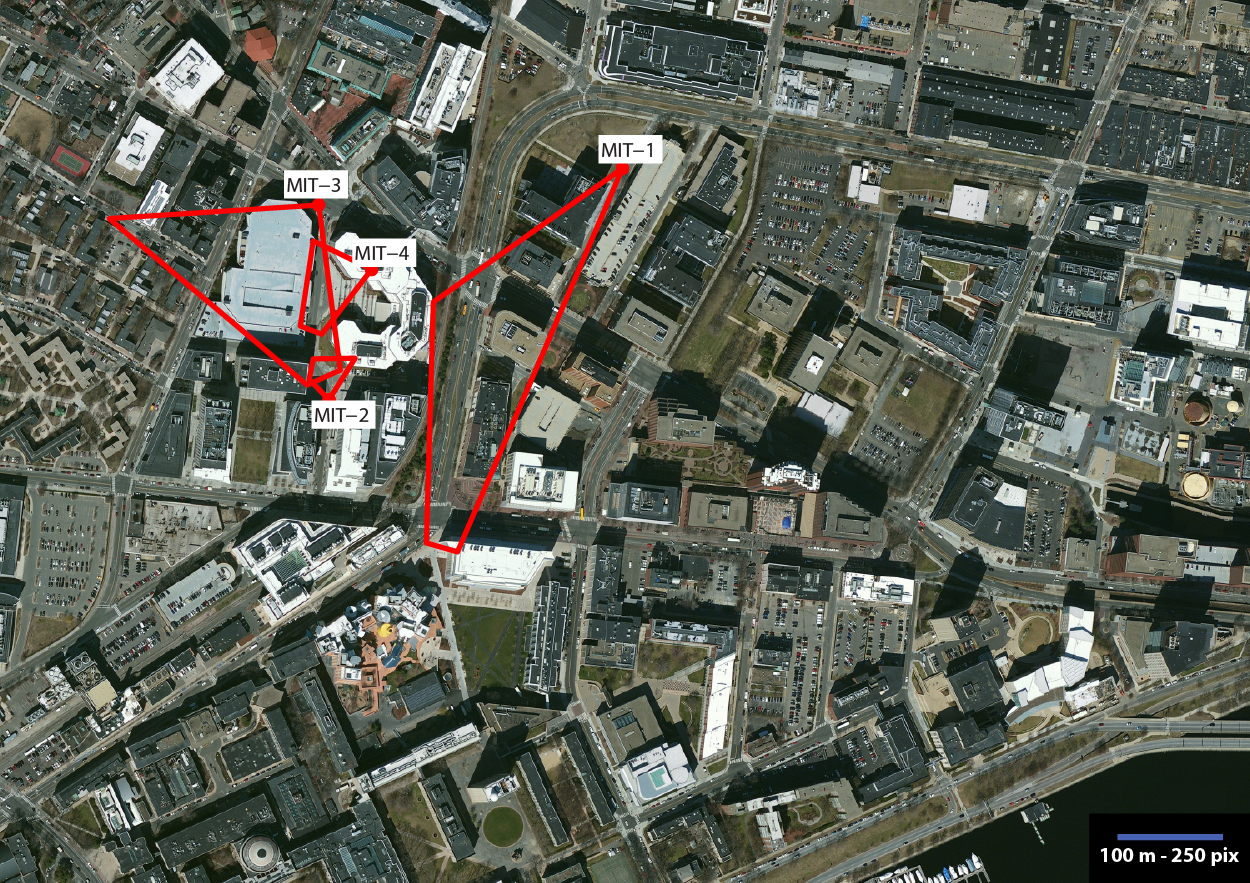

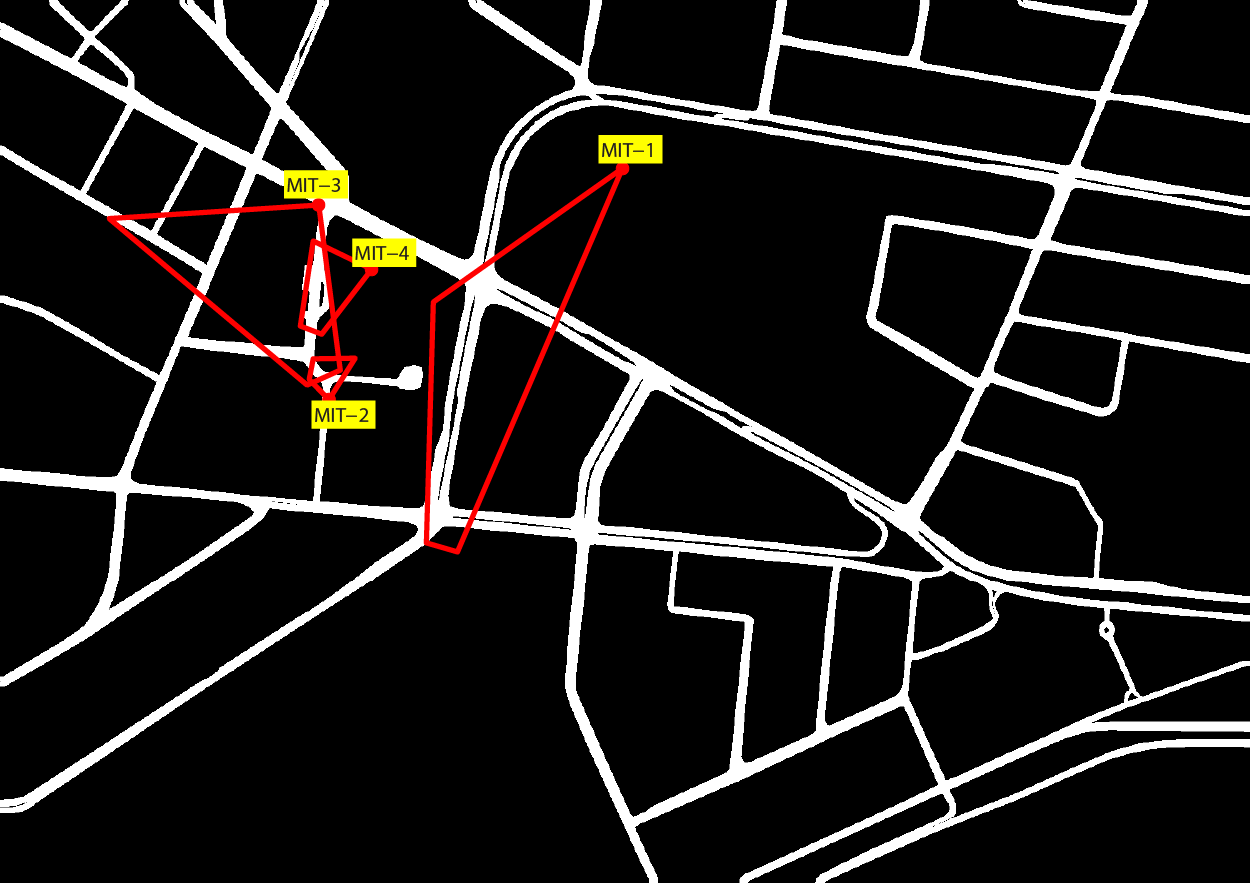

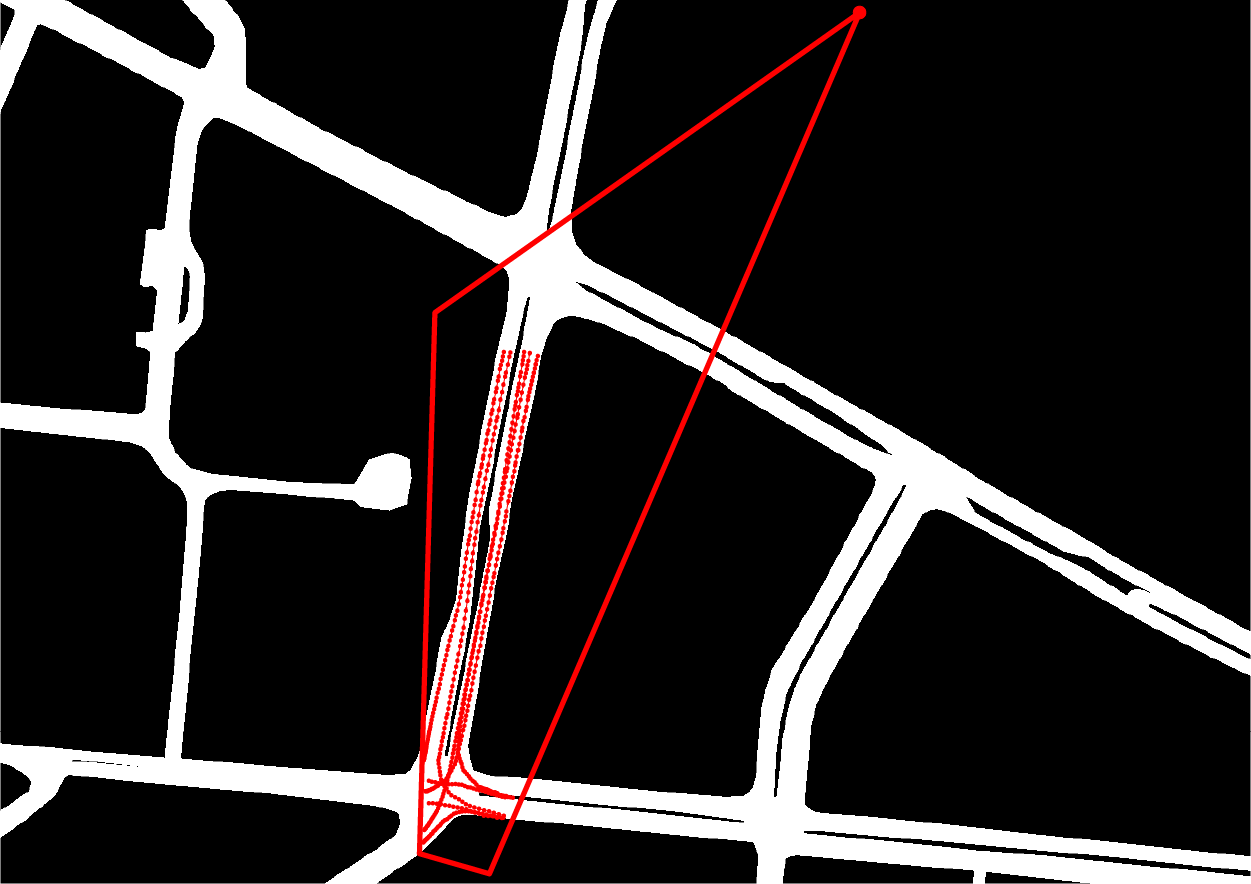

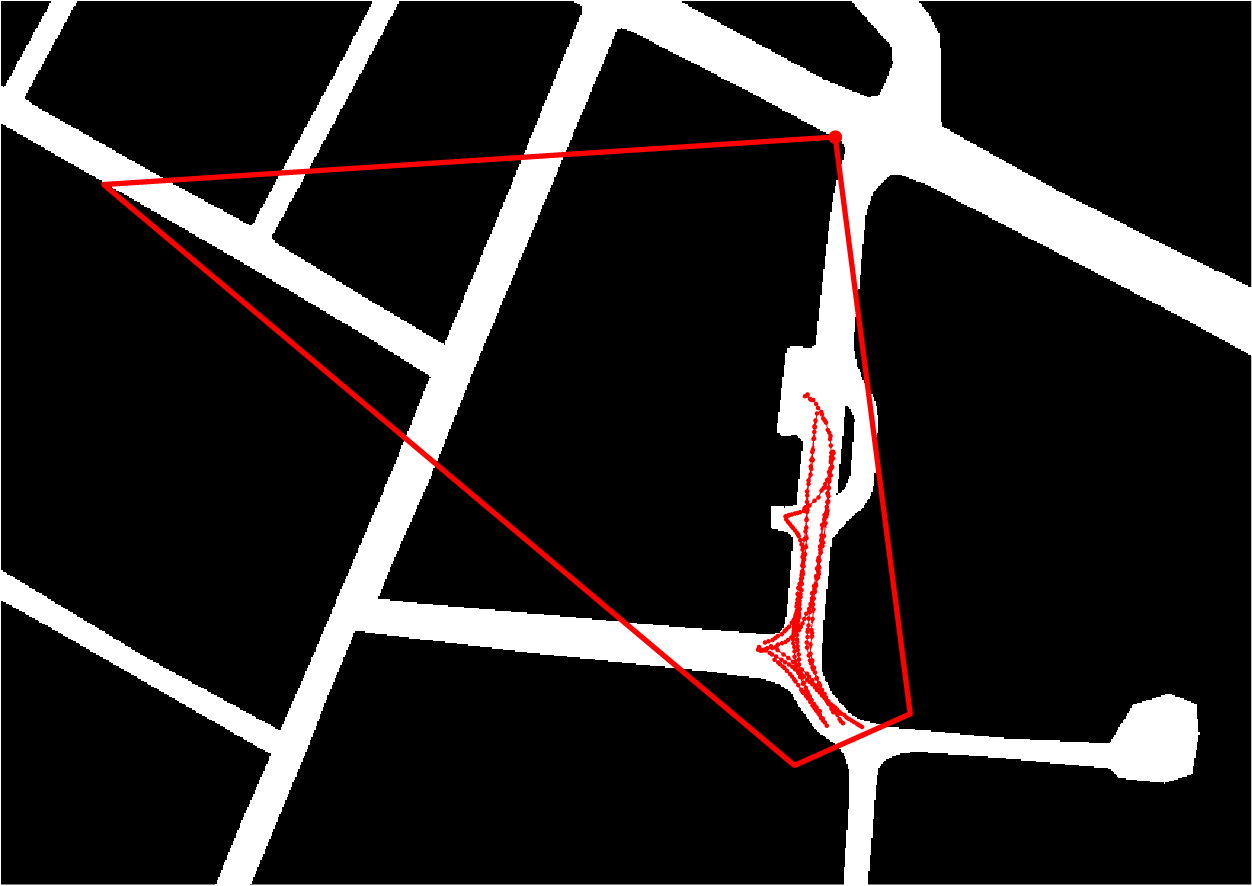

Real vehicle database (cameras MIT-1 to MIT-4):

- Real map of the Massachusetts Institute of Technology area, Cambridge, MA (USA)

- 1200 x 850 m

- Resolution: 0.4 m/pix

- 4 real cameras for urban traffic monitoring

- Metric rectification homography estimated manually (DLT algorithm); the resulting FoVs are arbitrary quadrilaterals

- MIT-1:

  - Adapted from the MIT Traffic Data Set [1]

  - Original observed tracks: 93 manually labeled real vehicle trajectories at 29 fps

  - Retained (after [3]): 8 representative tracks at 4.83 fps (1/6 of the original framerate)
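The metric rectification mentioned in the list above relies on a homography estimated with the DLT (Direct Linear Transform) algorithm. The following is a minimal sketch of the basic DLT using NumPy, not the code used to build the data set. It assumes at least four image-to-ground point correspondences picked by hand, and it omits the coordinate normalization that is usually recommended for noisy data. The function names `estimate_homography_dlt` and `apply_homography` are hypothetical.

```python
import numpy as np


def estimate_homography_dlt(src, dst):
    """Estimate the 3x3 homography H such that dst ~ H @ src (basic DLT).

    src, dst: arrays of shape (N, 2) with N >= 4 corresponding points.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    if src.shape != dst.shape or src.ndim != 2 or src.shape[1] != 2 or len(src) < 4:
        raise ValueError("need two (N, 2) arrays with N >= 4")

    # Each correspondence contributes two linear constraints on the 9 entries of H.
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    a = np.array(rows)

    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(a)
    h = vt[-1].reshape(3, 3)
    # Fix the scale; this assumes h[2, 2] is not (close to) zero.
    return h / h[2, 2]


def apply_homography(h, pts):
    """Map (N, 2) points through the homography h."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ h.T
    return mapped[:, :2] / mapped[:, 2:3]
```

Mapping the four corners of the image through the estimated homography with `apply_homography` gives a general quadrilateral on the ground plane, which is consistent with the FoV shapes described for the MIT cameras.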

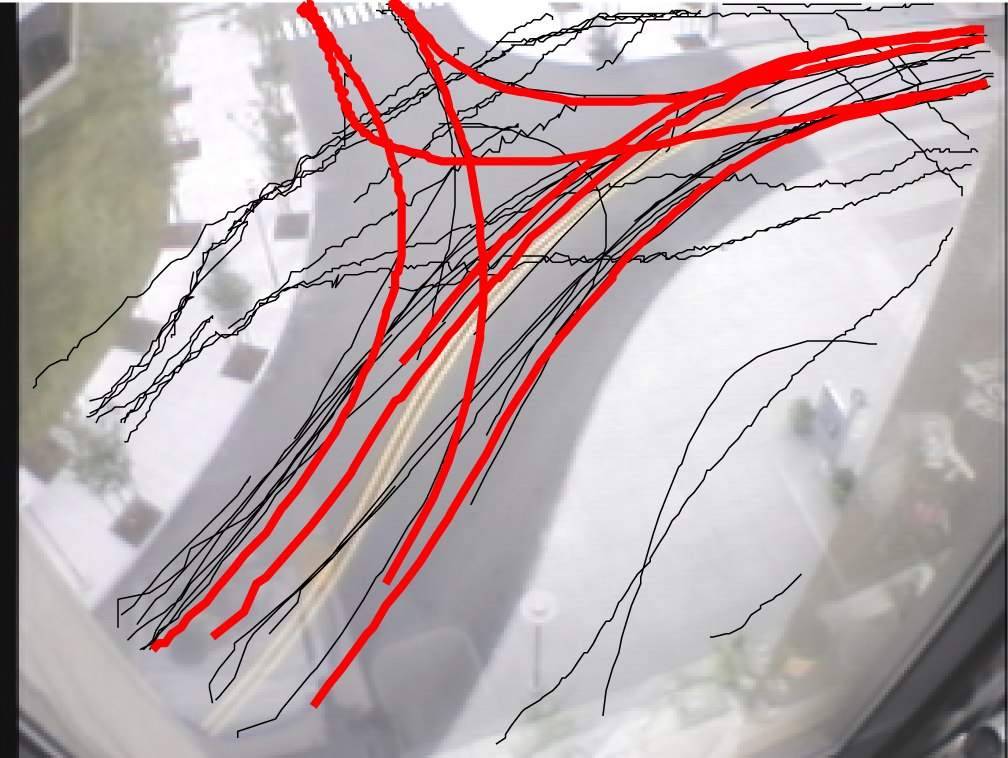

- MIT-2, MIT-3 and MIT-4:

  - Adapted from the MIT Trajectory Data Set [2]

  - Original observed tracks: respectively, 4025, 4096 and 5753 non-uniformly sampled real trajectories, comprising both traffic and pedestrians

  - Retained (after [3]): respectively, 7, 10 and 9 vehicle tracks resampled at 6 fps
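The two framerate reductions described for the MIT cameras can be illustrated as follows. This is a sketch only: the page does not state how the resampling was performed, so linear interpolation between samples is an assumption, and the function names `decimate_track` and `resample_track` are hypothetical. Decimation by a factor of 6 corresponds to MIT-1 (29 fps / 6 ≈ 4.83 fps), and uniform resampling at 6 fps corresponds to MIT-2 to MIT-4.

```python
import numpy as np


def decimate_track(xy, factor=6):
    """Keep every `factor`-th sample of a uniformly sampled track."""
    return np.asarray(xy)[::factor]


def resample_track(t, xy, fps=6.0):
    """Resample a non-uniformly sampled track at a uniform framerate.

    t:  strictly increasing timestamps in seconds, shape (N,)
    xy: positions, shape (N, 2)
    Assumption (not from the source): positions are linearly interpolated.
    """
    t = np.asarray(t, dtype=float)
    xy = np.asarray(xy, dtype=float)
    if t.ndim != 1 or xy.shape != (len(t), 2):
        raise ValueError("expected t of shape (N,) and xy of shape (N, 2)")
    if np.any(np.diff(t) <= 0):
        raise ValueError("timestamps must be strictly increasing")

    # Uniform time grid starting at the first sample and not exceeding the last.
    n = int(np.floor((t[-1] - t[0]) * fps + 1e-9)) + 1
    t_new = t[0] + np.arange(n) / fps
    x_new = np.interp(t_new, t, xy[:, 0])
    y_new = np.interp(t_new, t, xy[:, 1])
    return t_new, np.column_stack([x_new, y_new])
```

For example, a track lasting exactly one second yields 7 samples at 6 fps, since both endpoints are included.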

Complete environmental map with true camera FoVs superimposed

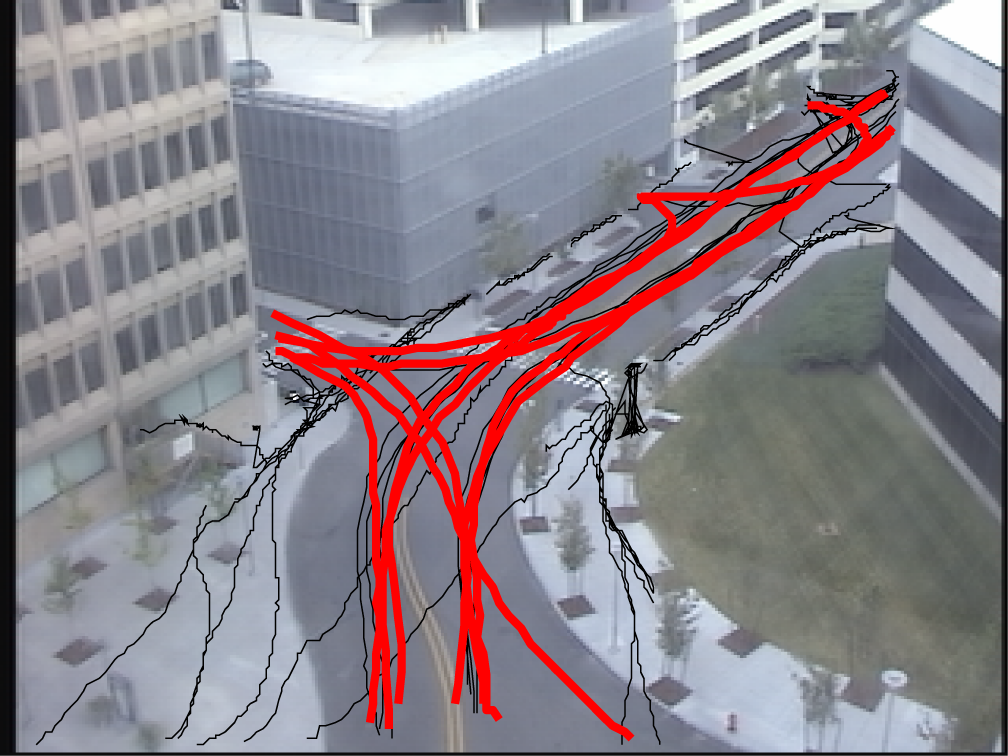

Original camera view and metrically rectified view, with FoV and retained tracks (red) and (part of the) original tracks (black) superimposed

References:

[1] X. Wang, X. Ma, E. Grimson, “Unsupervised activity perception in crowded and complicated scenes using hierarchical Bayesian models”, IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 31, no. 3, pp. 539-555, 2009.

[2] X. Wang, K. Tieu, E. Grimson, “Correspondence-free activity analysis and scene modeling in multiple camera views”, IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 32, no. 1, pp. 56-71, 2010.

[3] N. Anjum, A. Cavallaro, “Multifeature object trajectory clustering for video analysis”, IEEE Trans. Circuits and Systems for Video Technology, vol. 18, no. 11, pp. 1555-1564, 2008.

Grupo de Tratamiento de Imágenes (GTI), E.T.S.Ing. Telecomunicación

Universidad Politécnica de Madrid (UPM)

Av. Complutense nº 30, "Ciudad Universitaria". 28040 - Madrid (Spain). Tel: +34 913367353. Fax: +34 913367353