
TSLAB: Tool for Semiautomatic LABeling

TSLAB is an advanced, user-friendly tool for fast labeling of moving objects in video sequences. It can create three kinds of labels for each moving object: moving region, shadow, and occluded area. It also assigns global object-level identifiers, which make it possible to track labeled objects throughout the sequences. Additionally, TSLAB provides information about moving objects that are temporarily static.

Its graphical user interface makes it easy to create labels manually. This interface also includes several advanced semiautomatic tools that significantly simplify the labeling tasks and drastically reduce the time they require:

- Labeling from a previously created label: This is very useful in sequences with rigid or slow-moving objects, because in these situations the shape of the moving objects varies only slightly from frame to frame.

- Labeling supported by background subtraction: TSLAB can automatically select the moving pixels of an image by comparing them with an automatically obtained background model. This is very helpful in images whose moving objects differ substantially from the background.

- Labeling supported by an active contours strategy: TSLAB includes a lightweight, high-quality active contours algorithm that automatically fits the contour of a moving object, starting from an initial approximation of that contour.

For questions about this software, please contact Carlos Cuevas at ccr@gti.ssr.upm.es.

Citation:

C. Cuevas, E.M. Yáñez, N. García, “Tool for semiautomatic labeling of moving objects in video sequences: TSLAB”, Sensors, vol. 15, no. 7, pp. 15159-15178, Jul. 2015. (doi: 10.3390/s150715159)

Download:

- TSLAB v1.0 (5.66 MB)

- TSLAB v2.0 (34 MB)

- User manual (downloadable soon).

User manual (descriptions and demos):

To install TSLAB, please complete the following steps:

- Download and execute the newest TSLAB installer. Previous versions of TSLAB are available in the download section.

- Execute the installer and follow the steps to install TSLAB.

- If the MATLAB Compiler Runtime (MCR) corresponding to MATLAB 2014R is not installed on your computer, the installer will install it.

- Finally, execute TSLAB.exe, which will appear in your list of applications.

- Input: TSLAB takes uncompressed AVI video sequences as input. To continue a labeling started in a previous run of the application, TSLAB can also load the directory containing the metadata file and the ground-truth images described next.

- Output:

When you begin labeling a video sequence, the application asks you to specify a folder in which to store the output ground-truth. This output consists of the following files.

- Ground-truth images: 24bpp RGB BMP images associated with the labeled frames.

- Red channel: It is used to denote the pixels labeled as part of a moving object.

- Green channel: It is used to denote the pixels labeled as part of a shadow cast by a moving object.

- Blue channel: It is used to denote the pixels labeled as part of an occluded moving object.

Unlabeled pixels have the value 0 in all three channels. Labeled pixels have, in the corresponding channel, the image-level identifier assigned to the labeled object (these identifiers take values from 1 to 255 and are assigned to the labels in their order of creation in each image). Consider the following examples:

- A pixel set with the value (2,0,1) indicates that the second labeled object is occluding the first labeled object.

- A pixel set with the value (0,1,0) indicates the existence of a shadow cast by the first labeled object.

- A pixel set with the value (0,0,0) indicates that the pixel does not contain any labeling information.
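To make this encoding concrete, the following Python sketch decodes one ground-truth pixel into its three labels and collects the pixels of a given object. It assumes the BMP file has already been read into rows of (R, G, B) tuples with an imaging library of your choice. The functions `decode_gt_pixel` and `object_pixels` are hypothetical helpers and are not part of TSLAB.

```python
from typing import Dict, List, Optional, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B) values of one ground-truth pixel

# Hypothetical helpers (not part of TSLAB) illustrating the channel encoding.
def decode_gt_pixel(pixel: Pixel) -> Dict[str, Optional[int]]:
    """Map each channel to an image-level object identifier, or None if 0."""
    r, g, b = pixel
    return {
        "moving": r or None,    # red: pixel belongs to this moving object
        "shadow": g or None,    # green: shadow cast by this object
        "occluded": b or None,  # blue: this object is occluded here
    }

def object_pixels(image: List[List[Pixel]], object_id: int,
                  channel: int = 0) -> List[Tuple[int, int]]:
    """Return the (row, col) positions whose `channel` holds `object_id`."""
    return [
        (y, x)
        for y, row in enumerate(image)
        for x, px in enumerate(row)
        if px[channel] == object_id
    ]

# The three example pixels from the text above.
print(decode_gt_pixel((2, 0, 1)))  # {'moving': 2, 'shadow': None, 'occluded': 1}
print(decode_gt_pixel((0, 1, 0)))  # {'moving': None, 'shadow': 1, 'occluded': None}
print(decode_gt_pixel((0, 0, 0)))  # {'moving': None, 'shadow': None, 'occluded': None}
```

Passing `channel=1` or `channel=2` to `object_pixels` selects the shadow or occluded-area pixels of an object instead of its moving region.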

- Metadata file: An M file (Matlab file) containing a summary of the labels and their characteristics. The details about the data contained in this file can be found here.

Saving options: In addition to the default output data described above, TSLAB can save the ground-truth data in several other formats. Details appear in item 3.1.2 of this user manual.


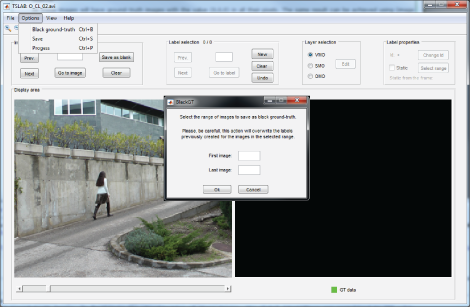

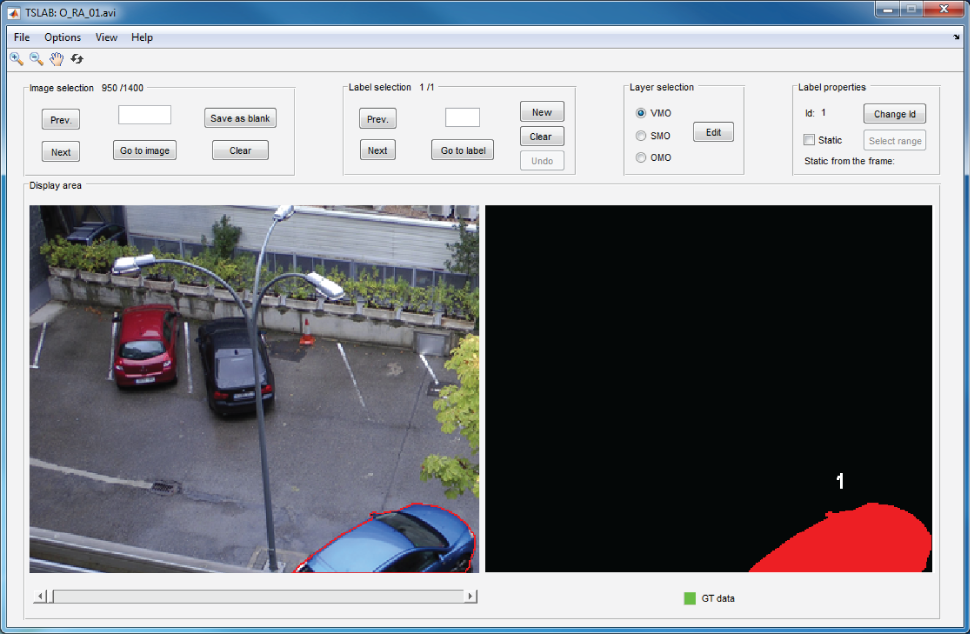

The "Navigation window" is the first window that appears when you run TSLAB. The following figure shows how it looks.

As can be seen, this window contains a top menu and five panels: "Image selection", "Label selection", "Layer selection", "Label properties", and "Display area". The functions of the top menu and of the five panels are described in the following items.

This window also includes a toolbar (below the top menu) for zooming, scrolling, or resetting the images shown in the display area.

The top menu in the "Navigation window" contains four items:

- File: It includes five sub-items to load a new video sequence, load previously created ground-truth data, create new ground-truth data, reset the application, and exit. These sub-items are described in detail in point 3.1.1.

- Options: It includes three sub-items to save images as images without ground-truth, save the ground-truth in different formats, and show the ground-truth progress. These sub-items are detailed in point 3.1.2.

- View: It includes five sub-items to select the data shown in the display area. These sub-items are detailed in point 3.1.3.

- Help: It includes a sub-item to view information about the developers and a sub-item to show the usage rights. Details on these two sub-items appear in point 3.1.4.

The "File" item contains the following five sub-items:

- Load video: Loads the AVI video sequence to be labeled. When this sub-item is clicked, a dialog with the text "Do you want to load or to create the ground-truth data?" is shown.

- To start a new labeling from scratch, press Create and select the path in which to store the ground-truth data.

- To continue or modify a previously started labeling, press Load and select the folder containing the labeling data.

- Load ground-truth: Once a video sequence has been loaded (regardless of whether you chose to create a new ground-truth or to use an existing one), this sub-item loads a ground-truth folder previously created with TSLAB.

- Create ground-truth: Once a video sequence has been loaded (regardless of whether you chose to create a new ground-truth or to use an existing one), this sub-item creates a new ground-truth folder.

- Reset: Resets the application.

- Exit: Exits TSLAB.

Demo videos

- Load a sequence and create new ground-truth data

- Load a sequence and a previously started ground-truth

The "Options" item includes the following three sub-items:

- Black ground-truth:

When this option is selected, a dialog appears in which you can select a range of images to be labeled as images without moving objects.

The ground-truth images of all these frames will have the value (0,0,0) in every pixel. The same result can be achieved image by image with the "Save as blank" button in the "Image selection" panel (see point 3.2).

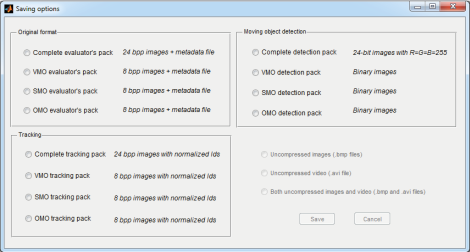

- Save:

This option saves the created ground-truth data in different formats. The window illustrated in the following figure appears, offering three kinds of saving options:

- Original format: Saves the metadata file and the ground-truth images described above. In these images, the labels contain global identifiers (values from 1 to 255 assigned to the labeled objects in order of appearance in the sequence) instead of the image-level identifiers mentioned previously.

- Tracking: Saves only the ground-truth images. To make these images easier to visualize, the global identifiers are normalized to 255.

- Detection: Saves only pixel-level masks (typically used to assess the quality of moving object detection methods).
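The rule behind the global identifiers of the "Original format" (numbers from 1 to 255 given to objects in order of appearance in the sequence) can be sketched in Python as follows. The sketch assumes each labeled object already has some sequence-wide key (plain strings here) and that frames and labels are visited in temporal and creation order. `assign_global_ids` is a hypothetical name, and TSLAB's internal bookkeeping may differ.

```python
from typing import Dict, Hashable, List

# Hypothetical illustration (not TSLAB code) of numbering objects by first appearance.
def assign_global_ids(frames: List[List[Hashable]]) -> Dict[Hashable, int]:
    """Give each object a global id (1, 2, ...) by order of first appearance."""
    global_ids: Dict[Hashable, int] = {}
    for frame_objects in frames:      # frames in temporal order
        for obj in frame_objects:     # labels in creation order
            if obj not in global_ids:
                if len(global_ids) == 255:
                    # an 8-bit channel cannot hold more than 255 identifiers
                    raise ValueError("more than 255 objects in the sequence")
                global_ids[obj] = len(global_ids) + 1
    return global_ids

print(assign_global_ids([["car"], ["person", "car"], ["bike", "person"]]))
# {'car': 1, 'person': 2, 'bike': 3}
```

The explicit limit mirrors the 1 to 255 range stated above, since each identifier must fit in one 8-bit channel of a 24bpp image.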

- Progress:

Select this option to display a window with the following data:

- Frames without ground-truth (amount and percentage).

- Frames with ground-truth (amount and percentage).

- Frames with black ground-truth (amount and percentage).

- Frames with nonblack ground-truth (amount and percentage).

- Total number of frames.

This window also contains two sub-windows that give direct access to any of the images without ground-truth data (left sub-window) or any of the images with black ground-truth data (right sub-window).
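The counts in the "Progress" window follow directly from the state of each frame. The following Python sketch shows one way to compute them. It assumes each frame is recorded as `None` (no ground-truth), `"black"`, or `"nonblack"`, that all percentages are relative to the total number of frames, and that frames are numbered from 1. These assumptions and the name `progress_summary` are hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical sketch (not TSLAB code) of the statistics in the "Progress" window.
def progress_summary(frames: List[Optional[str]]) -> Dict[str, object]:
    """Each entry of `frames` is None (no ground-truth), "black" or "nonblack"."""
    total = len(frames)

    def count(pred: Callable[[Optional[str]], bool]) -> Tuple[int, float]:
        n = sum(1 for f in frames if pred(f))
        return n, (100.0 * n / total if total else 0.0)  # (amount, percentage)

    return {
        "without_gt": count(lambda f: f is None),
        "with_gt": count(lambda f: f is not None),
        "black_gt": count(lambda f: f == "black"),
        "nonblack_gt": count(lambda f: f == "nonblack"),
        "total": total,
        # 1-based frame numbers listed in the left and right sub-windows
        "frames_without_gt": [i for i, f in enumerate(frames, 1) if f is None],
        "frames_black_gt": [i for i, f in enumerate(frames, 1) if f == "black"],
    }

summary = progress_summary([None, "black", "nonblack", "nonblack"])
print(summary["with_gt"], summary["frames_without_gt"])  # (3, 75.0) [1]
```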

The "View" item contains the following five sub-items:

- VMO: If marked, the VMO layers appear in the right image of the display area.

- SMO: If marked, the SMO layers appear in the right image of the display area.

- OMO: If marked, the OMO layers appear in the right image of the display area.

- Contour: If marked, the contours of the labels are represented on the left image of the display area.

- Id: If marked, the global identifiers (sequence-level identifiers) of the labels can be seen close to their corresponding labels.

The "Help" item contains the following two sub-items:

- About us: Clicking it opens a dialog showing information about the developers. This dialog is shown in the figure below.

- License: Click this sub-item to see the usage rights of TSLAB (illustrated in the following figure).

The panel named "Image selection" allows the following actions:

- Select the image to work with: By entering its number into the white box and clicking the "Go to image" button.

- Go to the next image: By clicking the "Next" button.

- Go to the previous image: By clicking the "Prev." button.

- Label the current image as an image without labels (black RGB image): By clicking the "Save as blank" button.

- Erase the current labels in the image: By clicking the "Clear" button.

Demo video

The panel named "Label selection" is used to create, delete, and select labels in the current image.

- "Prev.": Go to the previous label.

- "Next": Go to the next label.

- "Go to label": Go to the label indicated in the white box.

- "New": Create a new label.

- "Clear": Delete a label.

- "Undo": Undo the previous operation (for example, a non-desired delete action).

Demo videos

- Select and delete labels

- Create a new label and associate it with an Id

- Create a new label with a new Id

- Create a new label and

The panel named "Layer selection" is used to select the layer to edit (VMO, SMO, or OMO). Clicking the "Edit" button opens the labeling window (described in point 4), where the selected label can be edited.

Demo video

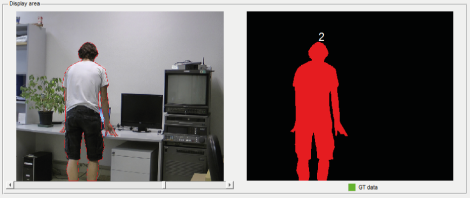

The panel named "Display area" shows the original images (on the left) and the label images (on the right). The content shown in these areas can be modified with the options of the "View" item in the top menu.

Finally, a colored square with a text is displayed at the bottom right of this panel. If the current image has been labeled, the square is green and the text says "GT data". If the image has not been labeled, the square is red and the text says "No GT data".

The following keyboard shortcuts are available in the "Navigation window":

- Right arrow: Go to the next image in the sequence.

- Left arrow: Go to the previous image in the sequence.

- Up arrow: Skip 10 images forward.

- Down arrow: Skip 10 images backward.

- F5: Switch from one label to another.

- F6: Switch from one layer to another.

- F7: Create a new label.

- F8: Remove the selected label.

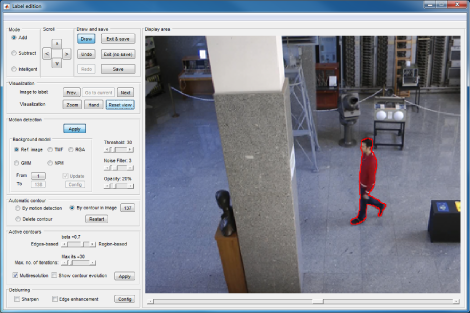

The "Labeling window", illustrated in the figure below, is used to create and edit the layers previously selected in the "Navigation window" (see point 3.4).

This window consists of nine panels: "Display area", "Mode", "Scroll", "Draw", "Visualization", "Motion detection", "Automatic contour", "Active contours", and "Deblurring". The details of each panel are presented in the following points.

The "Display area" panel shows the image to label. The areas inside the contours drawn on this image are added to or removed from the label, depending on the mode selected in the "Mode" panel (see point 4.2).

The contours can be created in several ways:

- By selecting points in the image (marked points are automatically stitched).

- By holding down the left button of the mouse and moving the mouse on the image.

- By combining the above two ways.

To close a contour, click the right mouse button. If the "Add" mode is selected, all the pixels inside the contour are added to the label. If the "Subtract" mode is selected, all the pixels inside the contour are removed from the label.
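The following Python sketch illustrates the add/subtract behavior described above. It assumes the closed contour is a list of (x, y) points, that pixel centers sit at integer coordinates, and that a label is a set of (row, col) pixels. The even-odd (ray casting) rule used here is a standard point-in-polygon test and may differ from TSLAB's own rasterization. `inside` and `apply_contour` are hypothetical names.

```python
from typing import List, Set, Tuple

Point = Tuple[float, float]  # (x, y) vertex of a drawn contour
Pixel = Tuple[int, int]      # (row, col) of a labeled pixel

# Hypothetical sketch (not TSLAB code): even-odd point-in-polygon test.
def inside(x: float, y: float, contour: List[Point]) -> bool:
    """Return True if (x, y) lies inside the closed contour."""
    result = False
    n = len(contour)
    for i in range(n):
        x1, y1 = contour[i]
        x2, y2 = contour[(i + 1) % n]  # the last point is joined to the first
        if (y1 > y) != (y2 > y):       # edge straddles the horizontal ray
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                result = not result
    return result

def apply_contour(label: Set[Pixel], contour: List[Point],
                  height: int, width: int, mode: str) -> Set[Pixel]:
    """Add or subtract the pixels enclosed by `contour` ("add"/"subtract")."""
    region = {(r, c) for r in range(height) for c in range(width)
              if inside(c, r, contour)}
    if mode == "add":
        return label | region
    if mode == "subtract":
        return label - region
    raise ValueError(f"unknown mode: {mode!r}")

square = [(1, 1), (4, 1), (4, 4), (1, 4)]
label = apply_contour(set(), square, 6, 6, "add")            # 9 pixels
label = apply_contour(label, [(2, 2), (3, 2), (3, 3), (2, 3)], 6, 6, "subtract")
print(len(label))  # 8
```

Scanning every pixel keeps the sketch short. A practical tool would restrict the scan to the contour's bounding box.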

Demo video

The "Mode" panel includes the following three options:

- Add: If selected, the area inside the contours drawn in the display area is added to the label.

- Subtract: If selected, the area inside the contours drawn in the display area is removed from the label.

- Intelligent: If selected, the area added to the label is the intersection of the area inside the contours manually drawn in the display area and the area detected by the motion detection tool. Details on the combined use of the intelligent mode and the motion detection tool appear in point 4.6.
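The "Intelligent" mode is a set intersection followed by a union with the existing label. The following Python sketch illustrates it, assuming the drawn region is a set of (row, col) pixels and the motion detection result is a binary mask (rows of 0/1). `mask_to_pixels` and `intelligent_add` are hypothetical names.

```python
from typing import List, Set, Tuple

Pixel = Tuple[int, int]  # (row, col)

# Hypothetical sketch (not TSLAB code) of the "Intelligent" mode.
def mask_to_pixels(mask: List[List[int]]) -> Set[Pixel]:
    """Convert a binary motion mask (rows of 0/1) into a set of pixels."""
    return {(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v}

def intelligent_add(label: Set[Pixel], contour_region: Set[Pixel],
                    motion_mask: List[List[int]]) -> Set[Pixel]:
    """Add only pixels that are inside the contour AND detected as moving."""
    return label | (contour_region & mask_to_pixels(motion_mask))

motion = [[1, 0, 0],
          [1, 1, 0]]
drawn = {(0, 0), (0, 1), (1, 1), (1, 2)}  # pixels enclosed by a rough contour
print(sorted(intelligent_add(set(), drawn, motion)))  # [(0, 0), (1, 1)]
```

This shows why a loosely drawn contour is enough in this mode: the background pixels it encloses are discarded because the motion mask does not flag them.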

Demo video

The buttons in the "Scroll" panel move a previously drawn contour (left, right, up, or down). This is very useful when a contour created in another image is used as the starting point for the current one.
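Moving a contour amounts to translating each of its vertices. The following Python sketch shows this translation. Clamping vertices to the image bounds is an assumption made for the sketch (TSLAB may handle borders differently), and the step size per button press is not documented here. `shift_contour` is a hypothetical name.

```python
from typing import List, Tuple

Point = Tuple[int, int]  # (x, y) vertex of a contour

# Hypothetical sketch (not TSLAB code) of the "Scroll" panel's translation.
def shift_contour(contour: List[Point], dx: int, dy: int,
                  width: int, height: int) -> List[Point]:
    """Translate every vertex by (dx, dy), clamping it to the image bounds."""
    return [
        (min(max(x + dx, 0), width - 1), min(max(y + dy, 0), height - 1))
        for x, y in contour
    ]

previous = [(10, 10), (20, 10), (20, 30)]  # contour copied from another frame
print(shift_contour(previous, 3, -2, 320, 240))  # [(13, 8), (23, 8), (23, 28)]
```

Clamping keeps every vertex inside the image, but it flattens a contour pushed against a border. Discarding out-of-bounds vertices is the alternative choice.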

Demo video

The "Draw" panel contains the following buttons:

- Draw: Click this button to draw contours in the display area (this happens automatically when the "Add" or "Subtract" mode is selected).

- Undo: Undoes undesired operations (e.g., restores a contour that was accidentally deleted).

- Redo: Redoes the previous undo operation.

- Exit & save: Saves the work and exits the window (returning to the "Navigation window").

- Exit (no save): Exits the window without saving the work.

- Save: Saves the work done so far.

The "Visualization" panel allows the following actions:

- Zoom: To enlarge or reduce the image size.

- Hand: To scroll the image.

- Reset view: To return to the initial view.

- Prev.: To go to the previous image.

- Next: To go to the subsequent image.

- Go to current: To return to the current image.

When labeling a moving object, it is sometimes hard to tell whether an image area belongs to the moving object or to the background. It is also difficult to keep the labeling consistent along a video sequence (i.e., to apply the same criteria when labeling a particular object in different images). The "Prev." and "Next" buttons ease these problems by showing the previous and subsequent images. The following video illustrates a situation in which these buttons are very useful.

Demo video

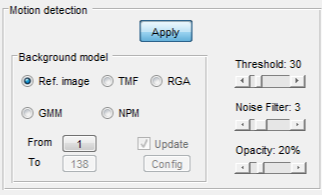

The "Motion detection" panel can be used to estimate a background model, which is compared with the current image to identify the moving objects.

TSLAB can obtain the background model in five ways:

- Using another image as background:

- Select the option "Ref. image".

- Enter the number of the image to use in the "From" box.

- Click "Apply" to see the result in the display area.

- Applying temporal median filtering [1]:

- Select the "TMF" checkbox.

- Enter the range of images used to build the background model (in the "From" and "To" boxes).

- Click "Apply" to see the result in the display area.

- Applying the Running Gaussian Average method [2]:

- Select the "RGA" checkbox.

- Enter the range of images used to build the background model (in the "From" and "To" boxes).

- Click "Config" to modify the default parameters (more information about these parameters can be found in [2]).

- Click "Apply" to see the result in the display area.

- NOTE: The first time this method is executed, an initial background model is created. For subsequent images, if the "Update" checkbox is marked, the initial model is updated; otherwise, a new model is created.

- Using Gaussian Mixture Models [3]:

- Select the "GMM" checkbox.

- Enter the first image used to build the background model (in the "From" box).

- Click "Config" to modify the default parameters (more information about these parameters can be found in [3]).

- Click "Apply" to see the result in the display area.

- NOTE: The first time this method is executed, an initial background model is created. For subsequent images, if the "Update" checkbox is marked, the initial model is updated; otherwise, a new model is created.

- Using nonparametric modeling [4]:

- Select the "NPM" checkbox.

- Enter the first image used to build the background model (in the "From" box).

- Click "Config" to modify the default parameters (more information about these parameters can be found in [4]).

- Click "Apply" to see the result in the display area.

- NOTE: The first time this method is executed, an initial background model is created. For subsequent images, if the "Update" checkbox is marked, the initial model is updated; otherwise, a new model is created.
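The following Python sketches show two of the background models listed above, for grayscale frames stored as rows of intensities. The first is a per-pixel temporal median (the idea behind TMF). The second is a per-pixel running mean and variance in the common running Gaussian average form. The parameters `alpha`, `init_var`, and `k` are illustrative assumptions and do not correspond to TSLAB's "Config" values. GMM [3] and NPM [4] are not sketched. All function names are hypothetical.

```python
from statistics import median
from typing import List, Tuple

Frame = List[List[float]]  # grayscale frame: rows of pixel intensities

# Hypothetical sketches (not TSLAB code) of the TMF and RGA ideas.
def temporal_median_background(frames: List[Frame]) -> Frame:
    """TMF-style model: per-pixel median over the selected frame range."""
    height, width = len(frames[0]), len(frames[0][0])
    return [[median(f[r][c] for f in frames) for c in range(width)]
            for r in range(height)]

def running_gaussian_average(frames: List[Frame], alpha: float = 0.05,
                             init_var: float = 36.0) -> Tuple[Frame, Frame]:
    """RGA-style model: per-pixel running mean and variance.

    mean <- alpha * I + (1 - alpha) * mean
    var  <- alpha * (I - mean)^2 + (1 - alpha) * var
    """
    mean = [row[:] for row in frames[0]]             # initialized from first frame
    var = [[init_var] * len(row) for row in frames[0]]
    for frame in frames[1:]:
        for r, row in enumerate(frame):
            for c, value in enumerate(row):
                mean[r][c] = alpha * value + (1 - alpha) * mean[r][c]
                d = value - mean[r][c]
                var[r][c] = alpha * d * d + (1 - alpha) * var[r][c]
    return mean, var

def rga_foreground(frame: Frame, mean: Frame, var: Frame,
                   k: float = 2.5) -> List[List[int]]:
    """Pixels farther than k standard deviations from the mean are moving."""
    return [[1 if abs(v - m) > k * s ** 0.5 else 0
             for v, m, s in zip(row, mrow, srow)]
            for row, mrow, srow in zip(frame, mean, var)]

frames = [[[10, 0]], [[12, 100]], [[11, 0]]]  # three 1x2 frames
print(temporal_median_background(frames))     # [[11, 0]]
```

The median ignores the transient value 100 in the second pixel, which is why TMF tolerates objects that pass through the selected frame range.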

After the "Apply" button is clicked, if "Ref. image" or "TMF" is the selected option, the mask shown in the display area is obtained by thresholding the absolute difference between the current image and the background model with the value set in the first bar on the right of the panel ("Threshold"). Differences higher than the threshold are set to 1, and the rest are set to 0.

Finally, to remove small regions and make the detected objects more compact, a morphological opening followed by a morphological closing is applied to the binary mask. The structuring element used in these operations can be modified with the bar named "Noise Filter". If the moving object to be segmented is small, this value should also be small; for large moving objects, it can be higher.
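The thresholding and cleanup steps just described can be sketched in Python as follows. The sketch assumes grayscale images and a square structuring element of side `2 * radius + 1`. Here `radius` stands in for the "Noise Filter" value, but TSLAB's actual element shape and size mapping are not documented here. All names are hypothetical.

```python
from typing import List

Image = List[List[float]]
Mask = List[List[int]]

# Hypothetical sketch (not TSLAB code): threshold, then opening and closing.
def threshold_difference(frame: Image, background: Image, threshold: float) -> Mask:
    """1 where |frame - background| > threshold, 0 elsewhere."""
    return [[1 if abs(p - b) > threshold else 0 for p, b in zip(frow, brow)]
            for frow, brow in zip(frame, background)]

def _morph(mask: Mask, radius: int, erode: bool) -> Mask:
    """Erosion (min) or dilation (max) with a (2*radius+1)^2 square element.

    Neighbors outside the image are ignored, so borders are not artificially
    eroded or grown.
    """
    h, w = len(mask), len(mask[0])
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            window = [mask[rr][cc]
                      for rr in range(max(0, r - radius), min(h, r + radius + 1))
                      for cc in range(max(0, c - radius), min(w, c + radius + 1))]
            row.append(min(window) if erode else max(window))
        out.append(row)
    return out

def clean_mask(mask: Mask, radius: int) -> Mask:
    """Opening (erode, then dilate) followed by closing (dilate, then erode)."""
    opened = _morph(_morph(mask, radius, erode=True), radius, erode=False)
    return _morph(_morph(opened, radius, erode=False), radius, erode=True)

mask = [[0] * 7 for _ in range(7)]
mask[0][0] = 1                      # isolated noise pixel
for r in range(2, 5):
    for c in range(2, 5):
        mask[r][c] = 1              # 3x3 object
cleaned = clean_mask(mask, 1)
print(cleaned[0][0], sum(map(sum, cleaned)))  # 0 9
```

The opening removes the isolated pixel and keeps the 3x3 object. A radius larger than the object would erase the object too, which is why the text recommends a small value for small objects.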

The last bar in the panel ("Opacity") sets the opacity of the color used to overlay the mask on the current image.

The following videos show some examples of use of this panel:

- The first one shows how to use all the functionalities in the panel.

- The second one is an example of labeling by combining the motion detection and the intelligent pencil.

Demo videos

- Functionalities in the "Motion detection" panel

- Labeling by combining the motion detection and the intelligent pencil

The options in the "Automatic contour" panel provide an initial contour that can then be refined or displaced to reach the target contour. The panel offers three options:

- By motion detection: If selected, the initial contour is extracted from the mask of differences obtained with the background model configured in the "Motion detection" panel.

- By contour in image: If selected, the initial contour is the contour created for the image indicated in the box to the right.

- Delete contour: Deletes the contours in the image.

In addition, the "Restart" button undoes the changes applied to the initial contour so that you can start from scratch.
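As a rough illustration of how an initial contour can be derived from a mask of differences, the following Python sketch collects the foreground pixels that touch the background or the image border (4-connectivity). TSLAB's actual extraction method is not documented here. An ordered contour would also need a tracing step (e.g., Moore-neighbor tracing), which is not shown. `mask_boundary` is a hypothetical name.

```python
from typing import List, Tuple

Mask = List[List[int]]

# Hypothetical sketch (not TSLAB code): boundary pixels of a binary mask.
def mask_boundary(mask: Mask) -> List[Tuple[int, int]]:
    """Foreground pixels that touch the background (or the image border)."""
    h, w = len(mask), len(mask[0])
    boundary = []
    for r in range(h):
        for c in range(w):
            if not mask[r][c]:
                continue
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                rr, cc = r + dr, c + dc
                if not (0 <= rr < h and 0 <= cc < w) or not mask[rr][cc]:
                    boundary.append((r, c))
                    break
    return boundary

blob = [[0, 0, 0, 0, 0],
        [0, 1, 1, 1, 0],
        [0, 1, 1, 1, 0],
        [0, 1, 1, 1, 0],
        [0, 0, 0, 0, 0]]
print(len(mask_boundary(blob)))  # 8 (every blob pixel except the center)
```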

The following videos show two examples of use of this panel:

- The first one shows how to segment an object by starting from the contour created for the previous image.

- The second one illustrates how to start the labeling with a contour created automatically from a mask of differences.

Demo videos

- Automatic contour from a previous labeling

- Automatic contour from the mask of differences

The "Active contours" panel configures and runs a fast, very high-quality active contours strategy that automatically labels an object from an initial contour around it. The panel contains the following elements:

- Beta parameter: It takes values between 0 and 1 and sets the relative contribution of the two kinds of energy (edge-based and region-based) used by the algorithm.

- Maximum number of iterations: It can be set between 1 and 400. If the starting contour is far from the target contour, this value should be high. However, the algorithm becomes slower as the number of iterations increases.

- Multiresolution: If this checkbox is enabled, the computational cost of the algorithm decreases considerably. However, unchecking it may improve the results in some situations (e.g., in scenes with a complex background composed of many small regions).

- Show contour evolution: If checked, the application shows the evolution of the contour (which also slows down the algorithm).

The default values of these parameters usually give the best results. However, the user can modify them to try to improve the results.

For more information on how to use these parameters, please see reference [5].
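The multiresolution scheme actually used is described in [5]. As a generic illustration of why working at several resolutions reduces the computational cost, the following Python sketch builds an image pyramid by averaging 2x2 blocks and maps a contour from a coarse level to the next finer one. A contour can then be evolved cheaply on small images and only refined at full resolution. This is not the method of [5], and all names are hypothetical.

```python
from typing import List, Tuple

Image = List[List[float]]

# Hypothetical, generic illustration (not the method of [5] or TSLAB code).
def downsample(img: Image) -> Image:
    """Halve the resolution by averaging non-overlapping 2x2 blocks."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [[(img[2 * r][2 * c] + img[2 * r][2 * c + 1]
              + img[2 * r + 1][2 * c] + img[2 * r + 1][2 * c + 1]) / 4
             for c in range(w)] for r in range(h)]

def build_pyramid(img: Image, levels: int) -> List[Image]:
    """Return [full resolution, 1/2, 1/4, ...], stopping at a 1-pixel side."""
    pyramid = [img]
    while len(pyramid) < levels and min(len(pyramid[-1]), len(pyramid[-1][0])) >= 2:
        pyramid.append(downsample(pyramid[-1]))
    return pyramid

def upscale_contour(contour: List[Tuple[float, float]],
                    factor: int = 2) -> List[Tuple[float, float]]:
    """Map a contour found at a coarse level to the next finer level."""
    return [(x * factor, y * factor) for x, y in contour]

img = [[float(4 * r + c) for c in range(4)] for r in range(4)]
pyr = build_pyramid(img, 3)
print([len(level) for level in pyr])  # [4, 2, 1]
print(pyr[1])                          # [[2.5, 4.5], [10.5, 12.5]]
```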

Demo video

The options in the "Deblurring" panel enhance the edges of the objects, which makes labeling easier when the image is blurred. An example of its use can be seen in the following image.

The panel provides two methods to deblur the images:

- Sharpen: An unsharp masking method is applied to the original image. The result will be an enhanced version of the image. This method depends on two parameters that can be modified by clicking the "Config" button.

- Radius: Standard deviation of the applied Gaussian lowpass filter.

- Amount: Strength of the sharpening effect.

- Edge enhancement: When this option is selected, the edges of the image are detected and superimposed on the image in the display area. This method depends on the following parameters (which can be modified by clicking the "Config" button):

- Method: The user can choose between Sobel, Prewitt, Roberts, Laplacian of Gaussian, or Canny.

- Threshold: Sensitivity threshold for the selected method.
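The following Python sketch shows the two techniques above on a grayscale image with values from 0 to 255. The first is unsharp masking, `I + amount * (I - blur(I))`, where "Radius" is the standard deviation of the Gaussian blur as described above. The second is Sobel edge detection, the first of the five listed methods, with "Threshold" interpreted as a gradient-magnitude threshold. The default values and that interpretation are illustrative assumptions, not TSLAB's settings, and all names are hypothetical.

```python
import math
from typing import List

Image = List[List[float]]

# Hypothetical sketch (not TSLAB code) of unsharp masking and Sobel edges.
def gaussian_kernel(sigma: float) -> List[float]:
    """Normalized 1-D Gaussian kernel truncated at 3 sigma."""
    radius = max(1, math.ceil(3 * sigma))
    k = [math.exp(-(i * i) / (2 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(k)
    return [v / total for v in k]

def gaussian_blur(img: Image, sigma: float) -> Image:
    """Separable Gaussian blur with replicated borders."""
    k = gaussian_kernel(sigma)
    rad = len(k) // 2
    h, w = len(img), len(img[0])
    rows = [[sum(k[i] * img[r][min(max(c + i - rad, 0), w - 1)] for i in range(len(k)))
             for c in range(w)] for r in range(h)]
    return [[sum(k[i] * rows[min(max(r + i - rad, 0), h - 1)][c] for i in range(len(k)))
             for c in range(w)] for r in range(h)]

def unsharp_mask(img: Image, radius: float = 1.0, amount: float = 0.8) -> Image:
    """sharpened = I + amount * (I - blur(I, radius)), clipped to [0, 255]."""
    blurred = gaussian_blur(img, radius)
    return [[min(255.0, max(0.0, p + amount * (p - b))) for p, b in zip(prow, brow)]
            for prow, brow in zip(img, blurred)]

def sobel_edges(img: Image, threshold: float) -> List[List[int]]:
    """1 where the Sobel gradient magnitude exceeds `threshold` (borders left 0)."""
    h, w = len(img), len(img[0])
    edges = [[0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = (img[r - 1][c + 1] + 2 * img[r][c + 1] + img[r + 1][c + 1]
                  - img[r - 1][c - 1] - 2 * img[r][c - 1] - img[r + 1][c - 1])
            gy = (img[r + 1][c - 1] + 2 * img[r + 1][c] + img[r + 1][c + 1]
                  - img[r - 1][c - 1] - 2 * img[r - 1][c] - img[r - 1][c + 1])
            edges[r][c] = 1 if math.hypot(gx, gy) > threshold else 0
    return edges

step = [[50.0] * 3 + [100.0] * 3 for _ in range(5)]  # vertical step edge
sharp = unsharp_mask(step)
print(sharp[2][2] < 50, sharp[2][3] > 100)  # True True
print(sobel_edges(step, 100)[2])            # [0, 0, 1, 1, 0, 0]
```

On the step edge, unsharp masking darkens the dark side and brightens the bright side, which increases the perceived sharpness. The Sobel mask marks the two columns next to the step.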

The following keyboard shortcuts are available in the "Labeling window":

- ctrl + z: Use the zoom on the image.

- ctrl + h: Use the hand (scroll) on the image.

- ctrl + d: Start drawing.

- a: Select the "Add" mode.

- s: Select the "Subtract" mode.

- i: Select the "Intelligent" mode.

- backspace: Undo the previous operation.

- e: Exit and save.

References:

[1] B. Lo and S. Velastin, "Automatic congestion detection system for underground platforms", IEEE Int. Symp. Intelligent Multimedia, Video and Speech Processing, pp. 158-161, 2001 (doi: 10.1109/ISIMP.2001.925356).

[2] C. R. Wren, A. Azarbayejani, T. Darrell, and A. P. Pentland, "Pfinder: Real-time tracking of the human body", IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 19, no. 7, pp. 780-785, 1997 (doi: 10.1109/34.598236).

[3] C. Stauffer and W. E. L. Grimson, "Adaptive background mixture models for real-time tracking", IEEE Conf. Computer Vision and Pattern Recognition, vol. 2, pp. 246-252, 1999 (doi: 10.1109/CVPR.1999.784637).

[4] C. Cuevas and N. García, "Improved background modeling for real-time spatio-temporal non-parametric moving object detection strategies", Image and Vision Computing, vol. 31, no. 9, pp. 616-630, Sep. 2013 (doi: 10.1016/j.imavis.2013.06.003).

[5] E.M. Yáñez, C. Cuevas, and N. García, "A Combined Active Contours Method for Segmentation using Localization and Multiresolution", IEEE Int. Conf. on Image Processing, ICIP 2013, Melbourne, Australia, pp. 1257-1261, 15-18 Sep. 2013 (doi: 10.1109/ICIP.2013.6738259).

Grupo de Tratamiento de Imágenes (GTI), E.T.S.Ing. Telecomunicación

Universidad Politécnica de Madrid (UPM)

Av. Complutense nº 30, "Ciudad Universitaria". 28040 - Madrid (Spain). Tel: +34 913367353. Fax: +34 913367353